August 2018

Intermediate to advanced

272 pages

7h 2m

English

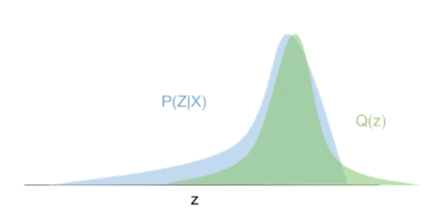

The KL divergence loss produces a number that indicates how close two probability distributions are to each other.

The closer the two distributions are to each other, the lower the loss. In the following graph, the blue distribution is trying to model the green distribution. As the blue distribution approaches the green one, the KL divergence loss approaches zero.
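To make this behavior concrete, here is a minimal sketch that computes the KL divergence between small discrete distributions using only the Python standard library. The helper name `kl_divergence`, the example probabilities, and the choice of direction (target relative to model) are assumptions made for illustration and are not taken from the text.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    # Terms with p_i == 0 contribute nothing, by the convention 0 * log(0) = 0.
    # Assumes q_i > 0 wherever p_i > 0; otherwise the divergence is infinite.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical target ("green") distribution over three outcomes.
target = [0.5, 0.3, 0.2]

# Hypothetical model ("blue") distributions; `near` is closer to the target than `far`.
far = [0.1, 0.1, 0.8]
near = [0.4, 0.3, 0.3]

kl_far = kl_divergence(target, far)
kl_near = kl_divergence(target, near)
kl_same = kl_divergence(target, target)

# The value shrinks as the model approaches the target and is zero when they match.
print(kl_far, kl_near, kl_same)
```

Note that KL divergence is not symmetric: swapping the two arguments generally gives a different value, so a loss built on it has to fix which distribution plays which role.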