Chapter 5. Data Preprocessing

The data we use to train our machine learning models is often provided in formats the models can't consume directly. In our example project, for instance, one feature we want to train on is available only as Yes and No tags. Our model requires a numerical representation of these values (e.g., 1 and 0). In this chapter, we explain how to convert features into consistent numerical representations so that your machine learning model can be trained on them.
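
As a minimal illustration of the Yes/No case, the plain-Python sketch below maps the tags to 1 and 0 and rejects any value it doesn't recognize, so unexpected inputs fail loudly instead of being silently encoded. It is not the TensorFlow Transform approach this chapter builds toward; the names `YES_NO_TO_INT` and `encode_yes_no` are hypothetical, and the case-insensitive matching is an assumption.

```python
from typing import Iterable, List

# Hypothetical mapping for a binary Yes/No feature (1 for Yes, 0 for No).
YES_NO_TO_INT = {"yes": 1, "no": 0}


def encode_yes_no(tags: Iterable[str]) -> List[int]:
    """Convert Yes/No tags into 1/0, raising on any other value."""
    encoded = []
    for tag in tags:
        key = tag.strip().lower()  # assumption: ignore case and surrounding spaces
        if key not in YES_NO_TO_INT:
            raise ValueError(f"unexpected tag: {tag!r}")
        encoded.append(YES_NO_TO_INT[key])
    return encoded


print(encode_yes_no(["Yes", "No", "yes"]))  # [1, 0, 1]
```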

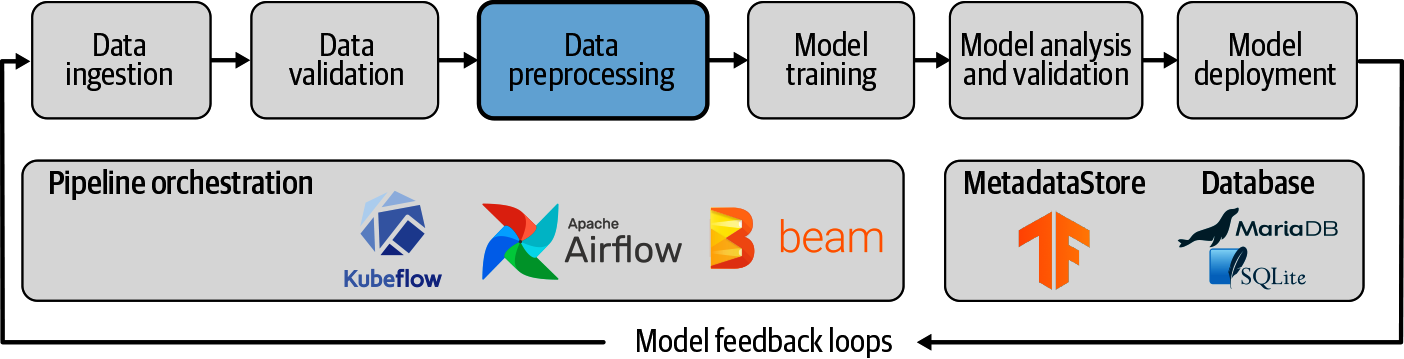

A major focus of this chapter is consistent preprocessing. As shown in Figure 5-1, preprocessing takes place after data validation, which we discussed in Chapter 4. TensorFlow Transform (TFT), the TFX component for data preprocessing, lets us build our preprocessing steps as TensorFlow graphs. In the following sections, we discuss why and when this is a good workflow and how to export the preprocessing steps. In Chapter 6, we will use the preprocessed datasets to train our machine learning model and the preserved transformation graph to export it.
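
Consistent preprocessing means the same transformation, with the same learned statistics, is applied during training and at serving time. The sketch below shows that idea in plain Python as an analogy, not as TFT code: a hypothetical `analyze` step computes the mean and standard deviation over the training data once, the statistics are saved (here as JSON, an assumption), and a hypothetical `transform` step applies a z-score using only those stored values. In TFT, the equivalent steps would be expressed as TensorFlow operations and exported as part of the transformation graph.

```python
import json
import math
from typing import Dict, List


def analyze(values: List[float]) -> Dict[str, float]:
    """Full pass over the training data: compute the statistics once."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    return {"mean": mean, "std": math.sqrt(variance)}


def transform(value: float, stats: Dict[str, float]) -> float:
    """Per-example z-score that only reads the stored statistics."""
    std = stats["std"] or 1.0  # guard against a constant feature (std == 0)
    return (value - stats["mean"]) / std


train = [2.0, 4.0, 6.0]
stats = analyze(train)

# Persist the statistics alongside the model, then reload them at serving time.
serving_stats = json.loads(json.dumps(stats))

print([transform(v, stats) for v in train])
print(transform(4.0, serving_stats))  # 0.0
```

Because serving reuses the saved statistics instead of recomputing them, a single serving request is scaled exactly as the training data was.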

Figure 5-1. Data preprocessing as part of ML pipelines

Data scientists might see expressing the preprocessing steps as TensorFlow operations as too much overhead. After all, it requires different implementations ...
