April 2017

Intermediate to advanced

318 pages

7h 40m

English

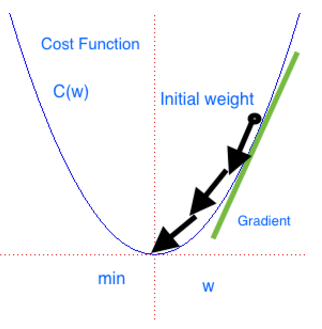

Now that we have defined and used a network, it is useful to build some intuition about how networks are trained. Let's focus on one popular training technique known as gradient descent (GD). Imagine a generic cost function C(w) in a single variable w, as in the following graph:

Gradient descent can be seen as a hiker who aims to climb down a mountain into a valley. The mountain represents the function C, while the valley represents the minimum Cmin. The hiker starts at a point w0 and moves little by little. At each step r, the gradient is the direction of maximum increase, so the hiker moves in the opposite direction to go downhill. Mathematically, ...
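To make the hiker analogy concrete, here is a minimal sketch of gradient descent on a one-variable cost function. The quadratic cost, the function names, and the `learning_rate` and `steps` parameters are assumptions chosen for illustration; they do not come from the text above.

```python
def cost(w):
    # Hypothetical cost function C(w) = (w - 3)^2, with its minimum at w = 3.
    return (w - 3.0) ** 2


def cost_gradient(w):
    # Derivative of the hypothetical cost: dC/dw = 2 * (w - 3).
    return 2.0 * (w - 3.0)


def gradient_descent(gradient, w0, learning_rate=0.1, steps=100):
    # Start at w0 and repeatedly take a small step against the gradient.
    # learning_rate (an assumed name) controls how far the hiker moves per step.
    w = w0
    for _ in range(steps):
        w = w - learning_rate * gradient(w)
    return w


w_min = gradient_descent(cost_gradient, w0=0.0)
print(w_min, cost(w_min))
```

Because the gradient points uphill, subtracting it moves w toward the valley. With this particular cost and step size, each step shrinks the distance to the minimum by a constant factor, so the hiker ends up very close to w = 3. A step size that is too large could overshoot the valley instead.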