April 2017

Intermediate to advanced

318 pages

7h 40m

English

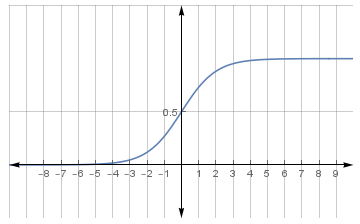

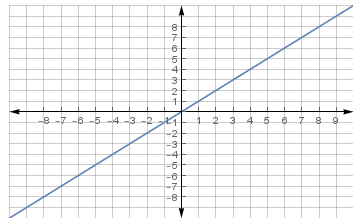

Commonly used activation functions include sigmoid, linear, hyperbolic tangent, and ReLU. We saw a few examples of activation functions in Chapter 1, Neural Networks Foundations, and more examples will be presented in the next chapters. The following diagrams plot these four activation functions:

Diagram panels: Sigmoid, Linear, Hyperbolic tangent, ReLU.
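As a rough companion to the diagrams, the four functions can be sketched in plain Python using their standard mathematical definitions. This is a minimal illustration using only the standard library, not the library implementation; the function names and the `ACTIVATIONS` dictionary are hypothetical and chosen here for readability.

```python
import math


def sigmoid(x):
    """Squash a real number into the interval [0, 1]."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def linear(x):
    """Identity activation: the output equals the input."""
    return x


def hyperbolic_tangent(x):
    """Squash a real number into the interval [-1, 1]."""
    return math.tanh(x)


def relu(x):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, x)


# Hypothetical lookup table, used only for this illustration.
ACTIVATIONS = {
    "sigmoid": sigmoid,
    "linear": linear,
    "tanh": hyperbolic_tangent,
    "relu": relu,
}

if __name__ == "__main__":
    # Evaluate each function at a few sample points to compare their shapes.
    samples = (-2.0, 0.0, 2.0)
    for name, fn in ACTIVATIONS.items():
        print(name, [round(fn(x), 3) for x in samples])
```

Evaluating each function at a negative, zero, and positive input shows the qualitative differences the diagrams convey: sigmoid and hyperbolic tangent saturate, linear passes values through unchanged, and ReLU clips negative inputs to zero.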