June 2018

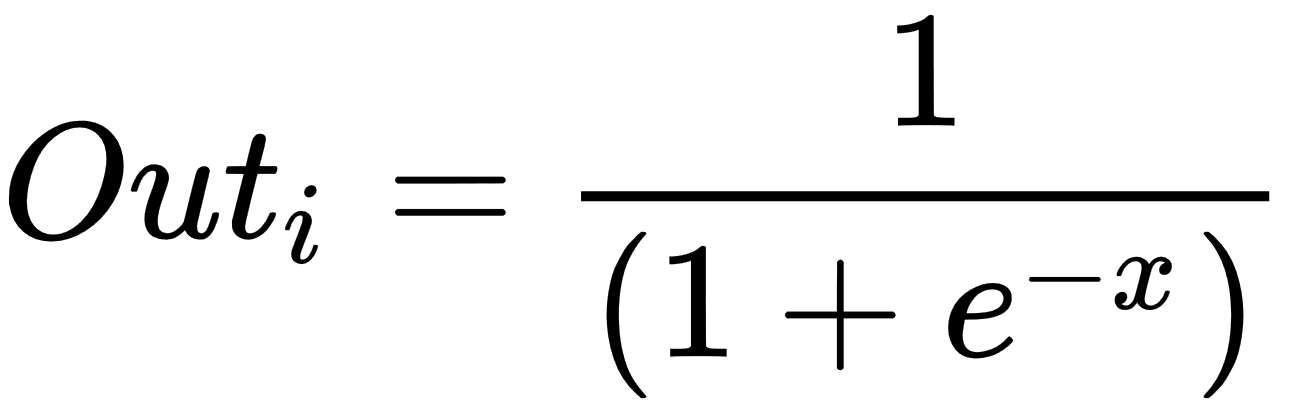

To allow a neural network to learn complex decision boundaries, we apply a non-linear activation function to some of its layers. Commonly used functions include tanh, ReLU, softmax, and variants of these. More technically, each neuron receives as its input signal the weighted sum of the activation values of the neurons connected to it, where the weights are the synaptic weights of those connections; the activation function then maps this sum to the neuron's output. One of the most widely used functions for this purpose is the so-called sigmoid function. It is a special case of the logistic function, and it is defined by the following formula:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

The domain of this function includes all real numbers, and the co-domain is (0, 1). This means ...
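
As a rough illustration of the sigmoid and of the weighted-sum input described above, here is a minimal Python sketch. The function names `sigmoid` and `neuron_output`, the `bias` parameter, and the sample values are assumptions made for this example and do not come from the text.

```python
import math


def sigmoid(x: float) -> float:
    """Standard sigmoid 1 / (1 + e^(-x)); the output lies in [0, 1] in floating point."""
    # Branch on the sign so that math.exp is only ever called with a
    # non-positive argument, which avoids OverflowError for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of incoming activations, passed through the sigmoid.

    `bias` is an assumption added for this sketch; the text only
    describes the weighted sum.
    """
    z = sum(w * a for w, a in zip(weights, inputs)) + bias
    return sigmoid(z)


if __name__ == "__main__":
    # Hypothetical activations and synaptic weights, chosen so the sum is 0.
    print(sigmoid(0.0))
    print(neuron_output([1.0, 2.0], [0.5, -0.25]))
```

Mathematically the sigmoid never reaches 0 or 1, but in floating point very large negative or positive inputs round to exactly 0.0 or 1.0, which is why the sketch branches on the sign of `x` rather than evaluating `math.exp(-x)` directly.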