Stochastic gradient descent

The approach to gradient descent we've just seen is often called batch gradient descent, because each update to the coefficients requires a pass over all of the data in a single batch. With very large amounts of data, each iteration can be time-consuming, and waiting for convergence could take a very long time.
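To make the cost of a batch update concrete, here is a minimal Python sketch, assuming simple linear regression (`y ≈ intercept + slope * x`) with a mean squared error cost. The function names, the learning rate `alpha`, and the iteration count are illustrative assumptions, not taken from the text.

```python
def batch_gradient_step(xs, ys, intercept, slope, alpha):
    """One batch update for y ~ intercept + slope * x (hypothetical helper)."""
    n = len(xs)
    grad_intercept = 0.0
    grad_slope = 0.0
    # Accumulate the gradient over every data point before changing anything.
    for x, y in zip(xs, ys):
        error = (intercept + slope * x) - y
        grad_intercept += error
        grad_slope += error * x
    # Only now, after the full pass, are the coefficients updated once.
    return (intercept - alpha * grad_intercept / n,
            slope - alpha * grad_slope / n)


def batch_gradient_descent(xs, ys, alpha=0.05, iterations=2000):
    """Repeat the batch step a fixed number of times (assumed stopping rule)."""
    intercept, slope = 0.0, 0.0
    for _ in range(iterations):
        intercept, slope = batch_gradient_step(xs, ys, intercept, slope, alpha)
    return intercept, slope
```

Each call to `batch_gradient_step` touches every data point but produces only one update, which is why the per-iteration cost grows with the size of the dataset.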

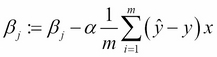

An alternative is stochastic gradient descent, or SGD. In this method, the coefficient estimates are updated continually as the input data is processed, rather than once per pass over the whole dataset. The update rule for stochastic gradient descent looks like this:
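In the usual textbook notation, which is an assumption here rather than something taken from the source, the update for each coefficient can be written as:

$$
\beta_j := \beta_j - \alpha \frac{\partial}{\partial \beta_j} J(\beta)
$$

where $\beta_j$ is the $j$-th coefficient, $\alpha$ is the learning rate, and $J(\beta)$ is the cost evaluated on the data currently being processed (a single example or a small subset) rather than on the full dataset.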

In fact, this is identical to batch ...
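As a rough illustration of updating the coefficients while the data is being processed, the following Python sketch applies one update per data point for simple linear regression with a squared-error cost. The function name, the defaults for `alpha` and `epochs`, and the shuffling of the data on each pass are assumptions made for the example, not details from the text.

```python
import random


def sgd_linear_regression(xs, ys, alpha=0.05, epochs=500, seed=0):
    """Fit y ~ intercept + slope * x with one update per example (a sketch)."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    intercept, slope = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        # Visit the examples in a fresh random order on every pass (assumed).
        rng.shuffle(indices)
        for i in indices:
            # Error of the current estimate on this single example.
            error = (intercept + slope * xs[i]) - ys[i]
            # Update immediately, without waiting for the rest of the data.
            intercept -= alpha * error
            slope -= alpha * error * xs[i]
    return intercept, slope
```

For example, `sgd_linear_regression([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])` uses noise-free data generated from `y = 1 + 2x`, so the estimates should approach an intercept of 1 and a slope of 2. With noisy data and a fixed learning rate, the estimates would typically hover around the best fit rather than settle on it exactly.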

