Chapter 3. Vertices and Touch Points

The iPhone has several input devices, including the accelerometer, microphone, and touchscreen, but your application will probably make the most use of the touchscreen. Since the screen has multitouch capabilities, your application can obtain a list of several touch points at any time. In a way, your 3D application is a “point processor”: it consumes points from the touchscreen and produces points (for example, triangle vertices) for OpenGL. So, I thought I’d use the same chapter to both introduce the touchscreen and cover some new ways of submitting vertices to OpenGL.

This chapter also covers some important best practices for vertex submission, such as the usage of vertex buffer objects. I would never argue with the great man who decreed that premature optimization is the root of all evil, but I want to hammer in good habits early on.

Toward the end of the chapter, you’ll learn how to generate some interesting geometry using parametric surfaces. This will form the basis for a fun demo app that you’ll gradually enhance over the course of the next several chapters.

Reading the Touchscreen

In this section, I’ll introduce the touchscreen API by walking through a modification of HelloCone that makes the cone point toward the user’s finger. You’ll need to change the name of the app from HelloCone to TouchCone, since the user now touches the cone instead of merely greeting it. To do this, make a copy of the project folder in Finder, and name the new folder TouchCone. Next, open the Xcode project (it will still have the old name), and select Project→Rename. Change the name to TouchCone, and click Rename.

Apple’s multitouch API is actually much richer than what we need to expose through our IRenderingEngine interface. For example, Apple’s API supports the concept of cancellation, which is useful to robustly handle situations such as an interruption from a phone call. For our purposes, a simplified interface to the rendering engine is sufficient. In fact, we don’t even need to accept multiple touches simultaneously; the touch handler methods can simply take a single coordinate.

For starters, let’s add three methods to IRenderingEngine for “finger up” (the end of a touch), “finger down” (the beginning of a touch), and “finger move.” Coordinates are passed to these methods using the ivec2 type from the vector library we added in RenderingEngine Declaration. Example 3-1 shows the modifications to IRenderingEngine.hpp; the new lines are the Vector.hpp include and the three OnFinger methods.

#include "Vector.hpp"

...

struct IRenderingEngine {

virtual void Initialize(int width, int height) = 0;

virtual void Render() const = 0;

virtual void UpdateAnimation(float timeStep) = 0;

virtual void OnRotate(DeviceOrientation newOrientation) = 0;

virtual void OnFingerUp(ivec2 location) = 0;

virtual void OnFingerDown(ivec2 location) = 0;

virtual void OnFingerMove(ivec2 oldLocation, ivec2 newLocation) = 0;

virtual ~IRenderingEngine() {}

};

The iPhone notifies your view of touch events by calling methods on your UIView class, which you can then override. The three methods we’re interested in overriding are touchesBegan, touchesEnded, and touchesMoved. Open GLView.mm, and implement these methods by simply passing on the coordinates to the rendering engine:

- (void) touchesBegan: (NSSet*) touches withEvent: (UIEvent*) event

{

UITouch* touch = [touches anyObject];

CGPoint location = [touch locationInView: self];

m_renderingEngine->OnFingerDown(ivec2(location.x, location.y));

}

- (void) touchesEnded: (NSSet*) touches withEvent: (UIEvent*) event

{

UITouch* touch = [touches anyObject];

CGPoint location = [touch locationInView: self];

m_renderingEngine->OnFingerUp(ivec2(location.x, location.y));

}

- (void) touchesMoved: (NSSet*) touches withEvent: (UIEvent*) event

{

UITouch* touch = [touches anyObject];

CGPoint previous = [touch previousLocationInView: self];

CGPoint current = [touch locationInView: self];

m_renderingEngine->OnFingerMove(ivec2(previous.x, previous.y),

ivec2(current.x, current.y));

}

The RenderingEngine1 implementation (Example 3-2) is similar to HelloCone, but the OnRotate and UpdateAnimation methods become empty. Example 3-2 also notifies the user that the cone is active by using glScalef to enlarge the geometry while the user is touching the screen. New and changed lines in the class declaration are shown in bold. Note that we’re removing the Animation structure.

class RenderingEngine1 : public IRenderingEngine {

public:

RenderingEngine1();

void Initialize(int width, int height);

void Render() const;

void UpdateAnimation(float timeStep) {}

void OnRotate(DeviceOrientation newOrientation) {}

void OnFingerUp(ivec2 location);

void OnFingerDown(ivec2 location);

void OnFingerMove(ivec2 oldLocation, ivec2 newLocation);

private:

vector<Vertex> m_cone;

vector<Vertex> m_disk;

GLfloat m_rotationAngle;

GLfloat m_scale;

ivec2 m_pivotPoint;

GLuint m_framebuffer;

GLuint m_colorRenderbuffer;

GLuint m_depthRenderbuffer;

};

RenderingEngine1::RenderingEngine1() : m_rotationAngle(0), m_scale(1)

{

glGenRenderbuffersOES(1, &m_colorRenderbuffer);

glBindRenderbufferOES(GL_RENDERBUFFER_OES, m_colorRenderbuffer);

}

void RenderingEngine1::Initialize(int width, int height)

{

m_pivotPoint = ivec2(width / 2, height / 2);

...

}

void RenderingEngine1::Render() const

{

glClearColor(0.5f, 0.5f, 0.5f, 1);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glPushMatrix();

glEnableClientState(GL_VERTEX_ARRAY);

glEnableClientState(GL_COLOR_ARRAY);

glRotatef(m_rotationAngle, 0, 0, 1); // Replaces call to rotation()

glScalef(m_scale, m_scale, m_scale); // Replaces call to glMultMatrixf()

// Draw the cone.

glVertexPointer(3, GL_FLOAT, sizeof(Vertex), &m_cone[0].Position.x);

glColorPointer(4, GL_FLOAT, sizeof(Vertex), &m_cone[0].Color.x);

glDrawArrays(GL_TRIANGLE_STRIP, 0, m_cone.size());

// Draw the disk that caps off the base of the cone.

glVertexPointer(3, GL_FLOAT, sizeof(Vertex), &m_disk[0].Position.x);

glColorPointer(4, GL_FLOAT, sizeof(Vertex), &m_disk[0].Color.x);

glDrawArrays(GL_TRIANGLE_FAN, 0, m_disk.size());

glDisableClientState(GL_VERTEX_ARRAY);

glDisableClientState(GL_COLOR_ARRAY);

glPopMatrix();

}

void RenderingEngine1::OnFingerUp(ivec2 location)

{

m_scale = 1.0f;

}

void RenderingEngine1::OnFingerDown(ivec2 location)

{

m_scale = 1.5f;

OnFingerMove(location, location);

}

void RenderingEngine1::OnFingerMove(ivec2 previous, ivec2 location)

{

vec2 direction = vec2(location - m_pivotPoint).Normalized();

// Flip the y-axis because pixel coords increase toward the bottom.

direction.y = -direction.y;

m_rotationAngle = std::acos(direction.y) * 180.0f / 3.14159f;

if (direction.x > 0)

m_rotationAngle = -m_rotationAngle;

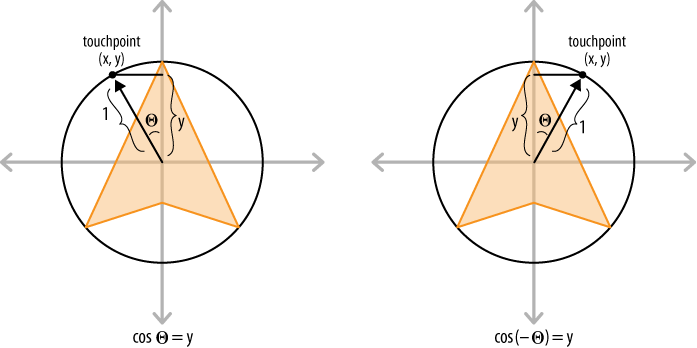

}The only bit of code in Example 3-2 that might need some extra

explanation is the OnFingerMove method; it uses some

trigonometric trickery to compute the angle of rotation. The best way to

explain this is with a diagram, as shown in Figure 3-1. Recall from high-school trig that the cosine is

“adjacent over hypotenuse.” We normalized the direction vector, so we know

the hypotenuse length is exactly one. Since cos(θ)=y,

then acos(y)=θ. If the direction vector points toward

the right of the screen, then the rotation angle should be reversed, as

illustrated on the right. This is because rotation angles are

counterclockwise in our coordinate system.

Note that OnFingerMove flips the y-axis. The pixel-space coordinates that come from UIView have the origin at the upper-left corner of the screen, with +Y pointing downward, while OpenGL (and mathematicians) prefer to have the origin at the center, with +Y pointing upward.
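To make the angle computation concrete, here is a minimal standalone sketch of the same acos-based logic. The Point struct and the RotationAngleDegrees name are stand-ins invented for this illustration (the book's code uses ivec2 and vec2), and the early return for a touch that lands exactly on the pivot is an added assumption, since a zero-length vector cannot be normalized.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the book's ivec2 type.
struct Point { int x; int y; };

// Returns the counterclockwise rotation (in degrees) that makes the cone
// point from the pivot toward the touch location.
float RotationAngleDegrees(Point touch, Point pivot)
{
    float dx = static_cast<float>(touch.x - pivot.x);
    float dy = static_cast<float>(touch.y - pivot.y);
    float length = std::sqrt(dx * dx + dy * dy);

    // Assumption: a touch exactly on the pivot leaves the cone upright.
    if (length == 0.0f)
        return 0.0f;

    // Normalize, then flip y because pixel coordinates grow downward.
    float x = dx / length;
    float y = -dy / length;
    assert(y >= -1.0f && y <= 1.0f);  // acos is only defined on [-1, 1]

    // For a unit vector measured from the +Y axis, cos(theta) = y.
    const float pi = 3.14159265f;
    float degrees = std::acos(y) * 180.0f / pi;

    // Touches to the right of the pivot need a clockwise (negative) angle.
    return (x > 0.0f) ? -degrees : degrees;
}
```

With the pivot at the center of a 320x480 view, a touch straight above the pivot yields roughly 0 degrees, a touch to the right yields roughly -90, and a touch to the left yields roughly +90.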

That’s it! The ES 1.1 version of the Touch Cone app is now functionally complete. If you want to compile and run at this point, don’t forget to turn on the ForceES1 switch at the top of GLView.mm.

Let’s move on to the ES 2.0 renderer. Open RenderingEngine2.cpp, and make the changes shown in bold in Example 3-3. Most of these changes are carried over from our ES 1.1 changes, with some minor differences in the Render method.

class RenderingEngine2 : public IRenderingEngine {

public:

RenderingEngine2();

void Initialize(int width, int height);

void Render() const;

void UpdateAnimation(float timeStep) {}

void OnRotate(DeviceOrientation newOrientation) {}

void OnFingerUp(ivec2 location);

void OnFingerDown(ivec2 location);

void OnFingerMove(ivec2 oldLocation, ivec2 newLocation);

private:

...

GLfloat m_rotationAngle;

GLfloat m_scale;

ivec2 m_pivotPoint;

};

RenderingEngine2::RenderingEngine2() : m_rotationAngle(0), m_scale(1)

{

glGenRenderbuffers(1, &m_colorRenderbuffer);

glBindRenderbuffer(GL_RENDERBUFFER, m_colorRenderbuffer);

}

void RenderingEngine2::Initialize(int width, int height)

{

m_pivotPoint = ivec2(width / 2, height / 2);

...

}

void RenderingEngine2::Render() const

{

GLuint positionSlot = glGetAttribLocation(m_simpleProgram,

"Position");

GLuint colorSlot = glGetAttribLocation(m_simpleProgram,

"SourceColor");

glClearColor(0.5f, 0.5f, 0.5f, 1);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableVertexAttribArray(positionSlot);

glEnableVertexAttribArray(colorSlot);

mat4 rotation = mat4::Rotate(m_rotationAngle);

mat4 scale = mat4::Scale(m_scale);

mat4 translation = mat4::Translate(0, 0, -7);

// Set the model-view matrix.

GLint modelviewUniform = glGetUniformLocation(m_simpleProgram,

"Modelview");

mat4 modelviewMatrix = scale * rotation * translation;

glUniformMatrix4fv(modelviewUniform, 1, 0, modelviewMatrix.Pointer());

// Draw the cone.

{

GLsizei stride = sizeof(Vertex);

const GLvoid* pCoords = &m_cone[0].Position.x;

const GLvoid* pColors = &m_cone[0].Color.x;

glVertexAttribPointer(positionSlot, 3, GL_FLOAT,

GL_FALSE, stride, pCoords);

glVertexAttribPointer(colorSlot, 4, GL_FLOAT,

GL_FALSE, stride, pColors);

glDrawArrays(GL_TRIANGLE_STRIP, 0, m_cone.size());

}

// Draw the disk that caps off the base of the cone.

{

GLsizei stride = sizeof(Vertex);

const GLvoid* pCoords = &m_disk[0].Position.x;

const GLvoid* pColors = &m_disk[0].Color.x;

glVertexAttribPointer(positionSlot, 3, GL_FLOAT,

GL_FALSE, stride, pCoords);

glVertexAttribPointer(colorSlot, 4, GL_FLOAT,

GL_FALSE, stride, pColors);

glDrawArrays(GL_TRIANGLE_FAN, 0, m_disk.size());

}

glDisableVertexAttribArray(positionSlot);

glDisableVertexAttribArray(colorSlot);

}

// See Example 3-2 for OnFingerUp, OnFingerDown, and OnFingerMove.

...

You can now turn off the ForceES1 switch in GLView.mm and build and run TouchCone on any Apple device. In the following sections, we’ll continue making improvements to the app, focusing on how to efficiently describe the cone geometry.

Saving Memory with Vertex Indexing

So far we’ve been using the glDrawArrays function for all our rendering. OpenGL ES offers another way of kicking off a sequence of triangles (or lines or points) through the use of the glDrawElements function. It has much the same effect as glDrawArrays, but instead of simply plowing forward through the vertex list, it first reads a list of indices from an index buffer and then uses those indices to choose vertices from the vertex buffer.

To help explain indexing and how it’s useful, let’s go back to the simple “square from two triangles” example from the previous chapter (Figure 2-3). Here’s one way of rendering the square with glDrawArrays:

vec2 vertices[6] = { vec2(0, 0), vec2(0, 1), vec2(1, 1),

vec2(1, 1), vec2(1, 0), vec2(0, 0) };

glVertexPointer(2, GL_FLOAT, sizeof(vec2), (void*) vertices);

glDrawArrays(GL_TRIANGLES, 0, 6);

Note that two vertices—(0, 0) and (1, 1)—appear twice in the vertex list. Vertex indexing can eliminate this redundancy. Here’s how:

vec2 vertices[4] = { vec2(0, 0), vec2(0, 1), vec2(1, 1), vec2(1, 0) };

GLubyte indices[6] = { 0, 1, 2, 2, 3, 0};

glVertexPointer(2, GL_FLOAT, sizeof(vec2), vertices);

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_BYTE, (void*) indices);

So, instead of sending 6 vertices to OpenGL (8 bytes per vertex), we’re now sending 4 vertices plus 6 indices (one byte per index). That’s a total of 48 bytes with glDrawArrays and 38 bytes with glDrawElements.
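The byte counts above follow from simple arithmetic, which can be sketched as two helper functions. The function names here are made up for illustration and are not part of OpenGL or the book's code.

```cpp
#include <cassert>
#include <cstddef>

// Bytes submitted when every triangle corner is a full vertex (glDrawArrays).
std::size_t NonIndexedBytes(std::size_t cornerCount, std::size_t bytesPerVertex)
{
    return cornerCount * bytesPerVertex;
}

// Bytes submitted when unique vertices are referenced through an index list
// (glDrawElements).
std::size_t IndexedBytes(std::size_t uniqueVertexCount, std::size_t bytesPerVertex,
                         std::size_t indexCount, std::size_t bytesPerIndex)
{
    // The index types discussed in this chapter: GLubyte (1) or GLushort (2).
    assert(bytesPerIndex == 1 || bytesPerIndex == 2);
    return uniqueVertexCount * bytesPerVertex + indexCount * bytesPerIndex;
}
```

For the square, NonIndexedBytes(6, 8) is 48 and IndexedBytes(4, 8, 6, 1) is 38, matching the totals in the text.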

You might be thinking “But I can just use a

triangle strip with glDrawArrays and save just as much

memory!” That’s true in this case. In fact, a triangle strip is the best

way to draw our lonely little square:

vec2 vertices[4] = { vec2(0, 0), vec2(0, 1), vec2(1, 0), vec2(1, 1) };

glVertexPointer(2, GL_FLOAT, sizeof(vec2), (void*) vertices);

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

That’s only 32 bytes (4 vertices at 8 bytes each), and adding an index buffer would buy us nothing.

However, more complex geometry (such as our cone model) usually involves even more repetition of vertices, so an index buffer offers much better savings. Moreover, GL_TRIANGLE_STRIP is great in certain cases, but in general it isn’t as versatile as GL_TRIANGLES. With GL_TRIANGLES, a single draw call can be used to render multiple disjoint pieces of geometry. To achieve best performance with OpenGL, execute as few draw calls per frame as possible.
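One way to picture the "multiple disjoint pieces in a single draw call" idea is to merge several indexed meshes into one vertex list and one index list before submitting them. The sketch below is CPU-side only; the Mesh struct and the AppendMesh and MakeSquare helpers are hypothetical names for this illustration, and 16-bit indices are assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vec2 { float x; float y; };

// Hypothetical container: unique vertices plus GL_TRIANGLES-style indices.
struct Mesh {
    std::vector<Vec2> vertices;
    std::vector<std::uint16_t> indices;
};

// Appends `piece` to `batch`, shifting its indices past the vertices that
// are already in the batch.
void AppendMesh(Mesh& batch, const Mesh& piece)
{
    const std::size_t base = batch.vertices.size();
    assert(base + piece.vertices.size() <= 65536);  // must fit 16-bit indices

    batch.vertices.insert(batch.vertices.end(),
                          piece.vertices.begin(), piece.vertices.end());
    for (std::uint16_t index : piece.indices)
        batch.indices.push_back(static_cast<std::uint16_t>(base + index));
}

// Builds a unit square at (left, bottom) using the index order from the text.
Mesh MakeSquare(float left, float bottom)
{
    Mesh square;
    square.vertices = { {left, bottom}, {left, bottom + 1},
                        {left + 1, bottom + 1}, {left + 1, bottom} };
    square.indices = { 0, 1, 2, 2, 3, 0 };
    return square;
}
```

Two squares merged this way end up as 8 vertices and 12 indices, which could then be drawn with one glDrawElements call using GL_TRIANGLES. A triangle strip cannot express the gap between the squares without extra tricks, which is the versatility argument made above.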

Let’s walk through the process of updating Touch Cone to use indexing. Take a look at these two lines in the class declaration of RenderingEngine1:

vector<Vertex> m_cone;

vector<Vertex> m_disk;

Indexing allows you to combine these two arrays, but it also requires a new array for holding the indices. OpenGL ES supports two types of indices: GLushort (16 bit) and GLubyte (8 bit). In this case, there are fewer than 256 vertices, so you can use GLubyte for best efficiency. Replace those two lines with the following:

vector<Vertex> m_coneVertices;

vector<GLubyte> m_coneIndices;

GLuint m_bodyIndexCount;

GLuint m_diskIndexCount;

Since the index buffer is partitioned into two parts (body and disk), we also added some counts that will get passed to glDrawElements, as you’ll see later.

Next you need to update the code that generates the geometry. With indexing, the number of required vertices for our cone shape is n*2+1, where n is the number of slices. There are n vertices at the apex, another n vertices at the rim, and one vertex for the center of the base. Example 3-4 shows how to generate the vertices. This code goes inside the Initialize method of the rendering engine class; before you insert it, delete everything between m_pivotPoint = ivec2(width / 2, height / 2); and // Create the depth buffer.

const float coneRadius = 0.5f;

const float coneHeight = 1.866f;

const int coneSlices = 40;

const float dtheta = TwoPi / coneSlices;

const int vertexCount = coneSlices * 2 + 1;

m_coneVertices.resize(vertexCount);

vector<Vertex>::iterator vertex = m_coneVertices.begin();

// Cone's body

for (float theta = 0; vertex != m_coneVertices.end() - 1; theta += dtheta) {

// Grayscale gradient

float brightness = std::abs(sin(theta)); // std::abs avoids the integer-only C abs()

vec4 color(brightness, brightness, brightness, 1);

// Apex vertex

vertex->Position = vec3(0, 1, 0);

vertex->Color = color;

vertex++;

// Rim vertex

vertex->Position.x = coneRadius * cos(theta);

vertex->Position.y = 1 - coneHeight;

vertex->Position.z = coneRadius * sin(theta);

vertex->Color = color;

vertex++;

}

// Disk center

vertex->Position = vec3(0, 1 - coneHeight, 0);

vertex->Color = vec4(1, 1, 1, 1);

In addition to the vertices, you need to store indices for 2n triangles, which requires a total of 6n indices.

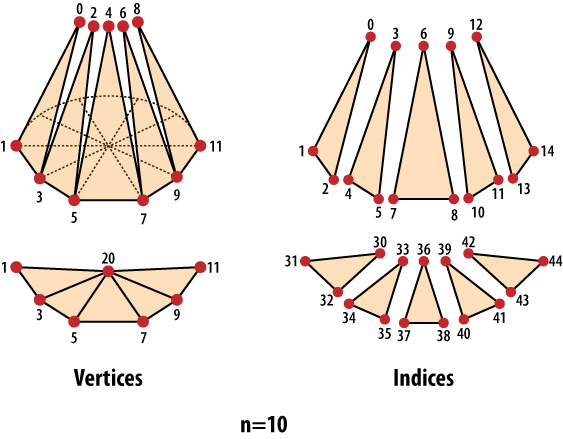

Figure 3-2 uses exploded views to show the tessellation of a cone with n = 10. The image on the left depicts the ordering of the vertex buffer; the image on the right depicts the ordering of the index buffer. Note that each vertex at the rim is shared between four different triangles; that’s the power of indexing! Remember, the vertices at the apex cannot be shared because each of those vertices requires a unique color attribute, as discussed in the previous chapter (see Figure 2-17).

Example 3-5 shows the code for generating indices (again, this code lives in our Initialize method). Note the usage of the modulo operator to wrap the indices back to the start of the array.

m_bodyIndexCount = coneSlices * 3;

m_diskIndexCount = coneSlices * 3;

m_coneIndices.resize(m_bodyIndexCount + m_diskIndexCount);

vector<GLubyte>::iterator index = m_coneIndices.begin();

// Body triangles

for (int i = 0; i < coneSlices * 2; i += 2) {

*index++ = i;

*index++ = (i + 1) % (2 * coneSlices);

*index++ = (i + 3) % (2 * coneSlices);

}

// Disk triangles

const int diskCenterIndex = vertexCount - 1;

for (int i = 1; i < coneSlices * 2 + 1; i += 2) {

*index++ = diskCenterIndex;

*index++ = i;

*index++ = (i + 2) % (2 * coneSlices);

}
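A quick way to convince yourself that the modulo wrapping works is to run the same loops outside of OpenGL and inspect the result. The sketch below repeats the logic of Example 3-5 in a free function; BuildConeIndices and CountUses are hypothetical names for this illustration, and std::uint8_t stands in for GLubyte.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Standalone copy of the index generation: n body triangles followed by
// n disk triangles, three indices each.
std::vector<std::uint8_t> BuildConeIndices(int coneSlices)
{
    const int vertexCount = coneSlices * 2 + 1;
    assert(coneSlices >= 3 && vertexCount <= 256);  // indices must fit a byte

    std::vector<std::uint8_t> indices;
    indices.reserve(coneSlices * 6);

    // Body triangles: apex vertex, its rim vertex, and the next rim vertex.
    for (int i = 0; i < coneSlices * 2; i += 2) {
        indices.push_back(static_cast<std::uint8_t>(i));
        indices.push_back(static_cast<std::uint8_t>((i + 1) % (2 * coneSlices)));
        indices.push_back(static_cast<std::uint8_t>((i + 3) % (2 * coneSlices)));
    }

    // Disk triangles: center vertex plus two neighboring rim vertices.
    const int diskCenterIndex = vertexCount - 1;
    for (int i = 1; i < coneSlices * 2 + 1; i += 2) {
        indices.push_back(static_cast<std::uint8_t>(diskCenterIndex));
        indices.push_back(static_cast<std::uint8_t>(i));
        indices.push_back(static_cast<std::uint8_t>((i + 2) % (2 * coneSlices)));
    }
    return indices;
}

// Counts how many times a given vertex is referenced by the index list.
int CountUses(const std::vector<std::uint8_t>& indices, int vertex)
{
    return static_cast<int>(std::count(indices.begin(), indices.end(),
                                       static_cast<std::uint8_t>(vertex)));
}
```

For 40 slices this produces 240 indices. Rim vertex 1 is referenced four times (two body triangles and two disk triangles), while each apex vertex is referenced only once, which matches the sharing described for Figure 3-2.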

Now it’s time to enter the new Render() method, shown in Example 3-6. Take a close look at the core of the rendering calls (in bold). Recall that the body of the cone has a grayscale gradient, but the cap is solid white. The draw call that renders the body should heed the color values specified in the vertex array, but the draw call for the disk should not. So, between the two calls to glDrawElements, the GL_COLOR_ARRAY attribute is turned off with glDisableClientState, and the color is explicitly set with glColor4f. Replace the definition of Render() in its entirety with the code in Example 3-6.

void RenderingEngine1::Render() const

{

GLsizei stride = sizeof(Vertex);

const GLvoid* pCoords = &m_coneVertices[0].Position.x;

const GLvoid* pColors = &m_coneVertices[0].Color.x;

glClearColor(0.5f, 0.5f, 0.5f, 1);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glPushMatrix();

glRotatef(m_rotationAngle, 0, 0, 1);

glScalef(m_scale, m_scale, m_scale);

glVertexPointer(3, GL_FLOAT, stride, pCoords);

glColorPointer(4, GL_FLOAT, stride, pColors);

glEnableClientState(GL_VERTEX_ARRAY);

const GLvoid* bodyIndices = &m_coneIndices[0];

const GLvoid* diskIndices = &m_coneIndices[m_bodyIndexCount];

glEnableClientState(GL_COLOR_ARRAY);

glDrawElements(GL_TRIANGLES, m_bodyIndexCount, GL_UNSIGNED_BYTE, bodyIndices);

glDisableClientState(GL_COLOR_ARRAY);

glColor4f(1, 1, 1, 1);

glDrawElements(GL_TRIANGLES, m_diskIndexCount, GL_UNSIGNED_BYTE, diskIndices);

glDisableClientState(GL_VERTEX_ARRAY);

glPopMatrix();

}

You should be able to build and run at this point. Next, modify the ES 2.0 backend by making the same changes we just went over. The only tricky part is the Render method, shown in Example 3-7. From a 30,000-foot view, it basically does the same thing as its ES 1.1 counterpart, but with some extra footwork at the beginning for setting up the transformation state.

void RenderingEngine2::Render() const

{

GLuint positionSlot = glGetAttribLocation(m_simpleProgram, "Position");

GLuint colorSlot = glGetAttribLocation(m_simpleProgram, "SourceColor");

mat4 rotation = mat4::Rotate(m_rotationAngle);

mat4 scale = mat4::Scale(m_scale);

mat4 translation = mat4::Translate(0, 0, -7);

GLint modelviewUniform = glGetUniformLocation(m_simpleProgram, "Modelview");

mat4 modelviewMatrix = scale * rotation * translation;

GLsizei stride = sizeof(Vertex);

const GLvoid* pCoords = &m_coneVertices[0].Position.x;

const GLvoid* pColors = &m_coneVertices[0].Color.x;

glClearColor(0.5f, 0.5f, 0.5f, 1);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glUniformMatrix4fv(modelviewUniform, 1, 0, modelviewMatrix.Pointer());

glVertexAttribPointer(positionSlot, 3, GL_FLOAT, GL_FALSE, stride, pCoords);

glVertexAttribPointer(colorSlot, 4, GL_FLOAT, GL_FALSE, stride, pColors);

glEnableVertexAttribArray(positionSlot);

const GLvoid* bodyIndices = &m_coneIndices[0];

const GLvoid* diskIndices = &m_coneIndices[m_bodyIndexCount];

glEnableVertexAttribArray(colorSlot);

glDrawElements(GL_TRIANGLES, m_bodyIndexCount, GL_UNSIGNED_BYTE, bodyIndices);

glDisableVertexAttribArray(colorSlot);

glVertexAttrib4f(colorSlot, 1, 1, 1, 1);

glDrawElements(GL_TRIANGLES, m_diskIndexCount, GL_UNSIGNED_BYTE, diskIndices);

glDisableVertexAttribArray(positionSlot);

}

That covers the basics of index buffers; we managed to reduce the memory footprint by about 28% over the nonindexed approach. Optimizations like this don’t matter much for silly demo apps like this one, but applying them to real-world apps can make a big difference.
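The 28% figure can be reproduced with a little arithmetic. The sketch below rests on two assumptions that are not shown in this section: each Vertex is seven floats (a vec3 position plus a vec4 color), and the nonindexed HelloCone layout used a triangle strip of 2(n+1) vertices for the body plus a triangle fan of n+2 vertices for the disk. The function names are invented for this illustration.

```cpp
#include <cassert>
#include <cstddef>

// Assumption: Vertex = vec3 position + vec4 color = 7 floats.
const std::size_t kBytesPerVertex = 7 * sizeof(float);

// Assumed nonindexed layout: strip of 2(n+1) vertices plus fan of n+2.
std::size_t NonIndexedConeBytes(std::size_t slices)
{
    std::size_t stripVertices = (slices + 1) * 2;
    std::size_t fanVertices = slices + 2;
    return (stripVertices + fanVertices) * kBytesPerVertex;
}

// Indexed layout from this section: 2n+1 vertices plus 6n one-byte indices.
std::size_t IndexedConeBytes(std::size_t slices)
{
    std::size_t vertexCount = slices * 2 + 1;
    assert(vertexCount <= 256);  // one-byte indices only reach 256 vertices
    std::size_t indexCount = slices * 6;
    return vertexCount * kBytesPerVertex + indexCount;
}

// Fraction of memory saved by switching to the indexed layout.
double IndexedSavings(std::size_t slices)
{
    return 1.0 - static_cast<double>(IndexedConeBytes(slices)) /
                 static_cast<double>(NonIndexedConeBytes(slices));
}
```

Under those assumptions, 40 slices cost 3,472 bytes without indexing and 2,508 bytes with it, a reduction of roughly 28%.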

Boosting Performance with Vertex Buffer Objects

OpenGL provides a mechanism called vertex buffer objects (often known as VBOs) whereby you give it ownership of a set of vertices (and/or indices), allowing you to free up CPU memory and avoid frequent CPU-to-GPU transfers. Using VBOs is such a highly recommended practice that I considered using them even in the HelloArrow sample. Going forward, all sample code in this book will use VBOs.

Let’s walk through the steps required to add VBOs to Touch Cone. First, remove these two lines from the RenderingEngine class declaration:

vector<Vertex> m_coneVertices;

vector<GLubyte> m_coneIndices;

They’re no longer needed because the vertex data will be stored in OpenGL memory. You do, however, need to store the handles to the vertex buffer objects. Object handles in OpenGL are of type GLuint. So, add these two lines to the class declaration:

GLuint m_vertexBuffer;

GLuint m_indexBuffer;

The vertex generation code in the Initialize method stays the same except that you should use a temporary variable rather than a class member for storing the vertex list. Specifically, replace this snippet:

m_coneVertices.resize(vertexCount);

vector<Vertex>::iterator vertex = m_coneVertices.begin();

// Cone's body

for (float theta = 0; vertex != m_coneVertices.end() - 1; theta += dtheta) {

...

m_coneIndices.resize(m_bodyIndexCount + m_diskIndexCount);

vector<GLubyte>::iterator index = m_coneIndices.begin();

with the following:

vector<Vertex> coneVertices(vertexCount);

vector<Vertex>::iterator vertex = coneVertices.begin();

// Cone's body

for (float theta = 0; vertex != coneVertices.end() - 1; theta += dtheta) {

...

vector<GLubyte> coneIndices(m_bodyIndexCount + m_diskIndexCount);

vector<GLubyte>::iterator index = coneIndices.begin();

Next you need to create the vertex buffer objects and populate them. This is done with some OpenGL function calls that follow the same Gen/Bind pattern that you’re already using for framebuffer objects. The Gen/Bind calls for VBOs are shown here (don’t add these snippets to the class just yet):

void glGenBuffers(GLsizei count, GLuint* handles);

void glBindBuffer(GLenum target, GLuint handle);

glGenBuffers generates a list of nonzero handles. count specifies the desired number of handles; handles points to a preallocated list. In this book we often generate only one handle at a time, so be aware that the glGen* functions can also be used to efficiently generate several handles at once.

The glBindBuffer function attaches a VBO to one of two binding points specified with the target parameter. The legal values for target are GL_ELEMENT_ARRAY_BUFFER (used for indices) and GL_ARRAY_BUFFER (used for vertices).

Populating a VBO that’s already attached to one of the two binding points is accomplished with this function call:

void glBufferData(GLenum target, GLsizeiptr size,

const GLvoid* data, GLenum usage);

target is the same as it is in glBindBuffer, size is the number of bytes in the VBO (GLsizeiptr is a signed integer typedef sized to hold a byte count; its exact underlying type depends on the platform), data points to the source memory, and usage gives a hint to OpenGL about how you intend to use the VBO. The possible values for usage are as follows:

- GL_STATIC_DRAW

This is what we’ll commonly use in this book; it tells OpenGL that the buffer never changes.

- GL_DYNAMIC_DRAW

This tells OpenGL that the buffer will be periodically updated using glBufferSubData.

- GL_STREAM_DRAW (ES 2.0 only)

This tells OpenGL that the buffer will be frequently updated (for example, once per frame) with glBufferSubData.

To modify the contents of an existing VBO, you can use glBufferSubData:

void glBufferSubData(GLenum target, GLintptr offset,

GLsizeiptr size, const GLvoid* data);

The only difference between this and glBufferData is the offset parameter, which specifies a number of bytes from the start of the VBO. Note that glBufferSubData should be used only to update a VBO that has previously been initialized with glBufferData.

We won’t be using glBufferSubData in any of the samples in this book. Frequent updates with glBufferSubData should be avoided for best performance, but in many scenarios it can be very useful.

Getting back to Touch Cone, let’s add code to create and populate the VBOs near the end of the Initialize method:

// Create the VBO for the vertices.

glGenBuffers(1, &m_vertexBuffer);

glBindBuffer(GL_ARRAY_BUFFER, m_vertexBuffer);

glBufferData(GL_ARRAY_BUFFER,

coneVertices.size() * sizeof(coneVertices[0]),

&coneVertices[0],

GL_STATIC_DRAW);

// Create the VBO for the indices.

glGenBuffers(1, &m_indexBuffer);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, m_indexBuffer);

glBufferData(GL_ELEMENT_ARRAY_BUFFER,

coneIndices.size() * sizeof(coneIndices[0]),

&coneIndices[0],

GL_STATIC_DRAW);

Before showing you how to use VBOs for rendering, let me refresh your memory on the gl*Pointer functions that you’ve been using in the Render method:

// ES 1.1

glVertexPointer(3, GL_FLOAT, stride, pCoords);

glColorPointer(4, GL_FLOAT, stride, pColors);

// ES 2.0

glVertexAttribPointer(positionSlot, 3, GL_FLOAT, GL_FALSE, stride, pCoords);

glVertexAttribPointer(colorSlot, 4, GL_FLOAT, GL_FALSE, stride, pColors);

The formal declarations for these functions look like this:

// From <OpenGLES/ES1/gl.h>

void glVertexPointer(GLint size, GLenum type, GLsizei stride, const GLvoid* pointer);

void glColorPointer(GLint size, GLenum type, GLsizei stride, const GLvoid* pointer);

void glNormalPointer(GLenum type, GLsizei stride, const GLvoid* pointer);

void glTexCoordPointer(GLint size, GLenum type, GLsizei stride, const GLvoid* pointer);

void glPointSizePointerOES(GLenum type, GLsizei stride, const GLvoid* pointer);

// From <OpenGLES/ES2/gl.h>

void glVertexAttribPointer(GLuint attributeIndex, GLint size, GLenum type,

GLboolean normalized, GLsizei stride,

const GLvoid* pointer);

The size parameter in all these functions controls the number of vector components per attribute. (The legal combinations of size and type were covered in the previous chapter in Table 2-1.) The stride parameter is the number of bytes between vertices. The pointer parameter is the one to watch out for: when no VBOs are bound (that is, the current VBO binding is zero), it’s a pointer to CPU memory; when a VBO is bound to GL_ARRAY_BUFFER, it changes meaning and becomes a byte offset rather than a pointer.
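When the pointer parameter is acting as a byte offset, the values to pass can be derived from the vertex layout itself. The sketch below assumes a Vertex made of a three-float position followed by a four-float color; the struct definitions and the BufferOffset helper are hypothetical stand-ins for this illustration rather than the book's vector library.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumed layout: three-float position followed by four-float color.
struct Vec3 { float x, y, z; };
struct Vec4 { float x, y, z, w; };
struct Vertex { Vec3 Position; Vec4 Color; };

// Packs a byte offset into the pointer-typed argument that the gl*Pointer
// functions expect while a VBO is bound to GL_ARRAY_BUFFER.
const void* BufferOffset(std::size_t byteOffset)
{
    assert(byteOffset < sizeof(Vertex));  // attribute must start inside a vertex
    return reinterpret_cast<const void*>(byteOffset);
}

const std::size_t kStride = sizeof(Vertex);                     // bytes between vertices
const std::size_t kPositionOffset = offsetof(Vertex, Position); // first member
const std::size_t kColorOffset = offsetof(Vertex, Color);       // follows the position
```

With this layout the stride is 28 bytes, the position starts at offset 0, and the color starts at offset 12, which is the same value as sizeof(vec3) in a tightly packed vertex. Using offsetof rather than a hand-computed constant keeps the offsets right if the vertex format changes later.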

The gl*Pointer functions are used to set up vertex attributes, but recall that indices are submitted through the last argument of glDrawElements. Here’s the formal declaration of glDrawElements:

void glDrawElements(GLenum topology, GLsizei count, GLenum type, const GLvoid* indices);

indices is another “chameleon” parameter. When a nonzero VBO is bound to GL_ELEMENT_ARRAY_BUFFER, it’s a byte offset; otherwise, it’s a pointer to CPU memory.
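Because the offset is measured in bytes rather than in indices, it has to be scaled by the size of the index type. A minimal sketch follows; IndexByteOffset is a hypothetical helper, not an OpenGL function.

```cpp
#include <cassert>
#include <cstddef>

// Byte offset of the index at position `firstIndex` inside a bound index
// buffer whose elements are `bytesPerIndex` bytes wide.
std::size_t IndexByteOffset(std::size_t firstIndex, std::size_t bytesPerIndex)
{
    // The index types discussed in this chapter: GLubyte (1) or GLushort (2).
    assert(bytesPerIndex == 1 || bytesPerIndex == 2);
    return firstIndex * bytesPerIndex;
}
```

With one-byte indices, the offset of the disk triangles is simply the body index count, so the two numbers coincide. If the index type were GLushort, the offset would need to be twice the index count.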

Note

The shape-shifting aspect of gl*Pointer and glDrawElements is an indicator of how OpenGL has grown organically through the years; if the API were designed from scratch, perhaps these functions wouldn’t be so overloaded.

To see glDrawElements and gl*Pointer being used with VBOs in Touch Cone, check out the Render method in Example 3-8.

void RenderingEngine1::Render() const

{

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glPushMatrix();

glRotatef(m_rotationAngle, 0, 0, 1);

glScalef(m_scale, m_scale, m_scale);

const GLvoid* colorOffset = (GLvoid*) sizeof(vec3);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, m_indexBuffer);

glBindBuffer(GL_ARRAY_BUFFER, m_vertexBuffer);

glVertexPointer(3, GL_FLOAT, sizeof(Vertex), 0);

glColorPointer(4, GL_FLOAT, sizeof(Vertex), colorOffset);

glEnableClientState(GL_VERTEX_ARRAY);

const GLvoid* bodyOffset = 0;

const GLvoid* diskOffset = (GLvoid*) m_bodyIndexCount;

glEnableClientState(GL_COLOR_ARRAY);

glDrawElements(GL_TRIANGLES, m_bodyIndexCount, GL_UNSIGNED_BYTE, bodyOffset);

glDisableClientState(GL_COLOR_ARRAY);

glColor4f(1, 1, 1, 1);

glDrawElements(GL_TRIANGLES, m_diskIndexCount, GL_UNSIGNED_BYTE, diskOffset);

glDisableClientState(GL_VERTEX_ARRAY);

glPopMatrix();

}

Example 3-9 shows the ES 2.0 variant. From 30,000 feet, it basically does the same thing, even though many of the actual OpenGL calls are different.

void RenderingEngine2::Render() const

{

GLuint positionSlot = glGetAttribLocation(m_simpleProgram, "Position");

GLuint colorSlot = glGetAttribLocation(m_simpleProgram, "SourceColor");

mat4 rotation = mat4::Rotate(m_rotationAngle);

mat4 scale = mat4::Scale(m_scale);

mat4 translation = mat4::Translate(0, 0, -7);

GLint modelviewUniform = glGetUniformLocation(m_simpleProgram, "Modelview");

mat4 modelviewMatrix = scale * rotation * translation;

GLsizei stride = sizeof(Vertex);

const GLvoid* colorOffset = (GLvoid*) sizeof(vec3);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glUniformMatrix4fv(modelviewUniform, 1, 0, modelviewMatrix.Pointer());

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, m_indexBuffer);

glBindBuffer(GL_ARRAY_BUFFER, m_vertexBuffer);

glVertexAttribPointer(positionSlot, 3, GL_FLOAT, GL_FALSE, stride, 0);

glVertexAttribPointer(colorSlot, 4, GL_FLOAT, GL_FALSE, stride, colorOffset);

glEnableVertexAttribArray(positionSlot);

const GLvoid* bodyOffset = 0;

const GLvoid* diskOffset = (GLvoid*) m_bodyIndexCount;

glEnableVertexAttribArray(colorSlot);

glDrawElements(GL_TRIANGLES, m_bodyIndexCount, GL_UNSIGNED_BYTE, bodyOffset);

glDisableVertexAttribArray(colorSlot);

glVertexAttrib4f(colorSlot, 1, 1, 1, 1);

glDrawElements(GL_TRIANGLES, m_diskIndexCount, GL_UNSIGNED_BYTE, diskOffset);

glDisableVertexAttribArray(positionSlot);

}

That wraps up the tutorial on VBOs; we’ve taken the Touch Cone sample as far as we can take it!

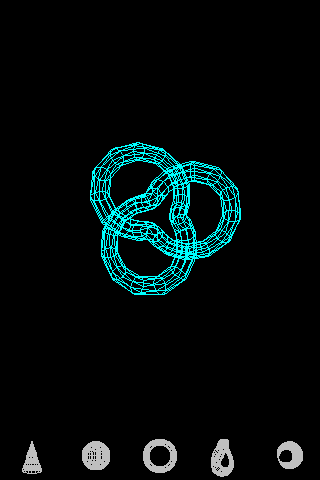

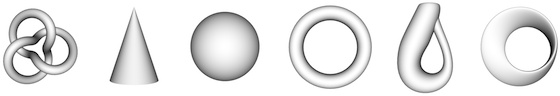

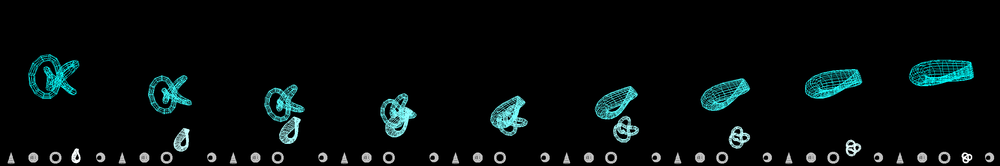

Creating a Wireframe Viewer

Let’s use vertex buffer objects and the touchscreen to create a fun new app. Instead of relying on triangles like we’ve been doing so far, we’ll use GL_LINES topology to create a simple wireframe viewer, as shown in Figure 3-3. The rotation in Touch Cone was restricted to the plane, but this app will let you spin the geometry around to any orientation; behind the scenes, we’ll use quaternions to achieve a trackball-like effect. Additionally, we’ll include a row of buttons along the bottom of the screen to allow the user to switch between different shapes. They won’t be true buttons in the UIKit sense; remember, for best performance, you should let OpenGL do all the rendering. This application will provide a good foundation upon which to learn many OpenGL concepts, and we’ll continue to evolve it in the coming chapters.

If you’re planning on following along with the code, you’ll first need to start with the WireframeSkeleton project from this book’s example code (available at http://oreilly.com/catalog/9780596804831). In the Finder, make a copy of the directory that contains this project, and name the new directory SimpleWireframe. Next, open the project (it will still be named WireframeSkeleton), and then choose Project→Rename. Rename it to SimpleWireframe.

This skeleton project includes all the building blocks you saw (the vector library from the appendix, the GLView class, and the application delegate). There are a few differences between this and the previous examples, so be sure to look over the classes in the project before you proceed:

- The application delegate has been renamed to have a very generic name, AppDelegate.

- The GLView class uses an application engine rather than a rendering engine. This is because we’ll be taking a new approach to how we factor the ES 1.1– and ES 2.0–specific code from the rest of the project; more on this shortly.

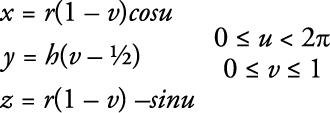

Parametric Surfaces for Fun

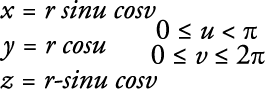

You might have been put off by all the work required for tessellating the cone shape in the previous samples. It would be painful if you had to figure out a clever tessellation for every shape that pops into your head! Thankfully, most 3D modeling software can export to a format that has post-tessellated content; the popular .obj file format is one example of this. Moreover, the cone shape happens to be a mathematically defined shape called a parametric surface; all parametric surfaces are relatively easy to tessellate in a generic manner. A parametric surface is defined with a function that takes a 2D vector for input and produces a 3D vector as output. This turns out to be especially convenient because the input vectors can also be used as texture coordinates, as we’ll learn in a future chapter.

The input to a parametric function is said to be in its domain, while the output is said to be in its range. Since all parametric surfaces can be used to generate OpenGL vertices in a consistent manner, it makes sense to create a simple class hierarchy for them. Example 3-10 shows two subclasses: a cone and a sphere. This has been included in the WireframeSkeleton project for your convenience, so there is no need for you to add it here.

#include "ParametricSurface.hpp"

class Cone : public ParametricSurface {

public:

Cone(float height, float radius) : m_height(height), m_radius(radius)

{

ParametricInterval interval = { ivec2(20, 20), vec2(TwoPi, 1) };

SetInterval(interval);

}

vec3 Evaluate(const vec2& domain) const

{

float u = domain.x, v = domain.y;

float x = m_radius * (1 - v) * cos(u);

float y = m_height * (v - 0.5f);

float z = m_radius * (1 - v) * -sin(u);

return vec3(x, y, z);

}

private:

float m_height;

float m_radius;

};

class Sphere : public ParametricSurface {

public:

Sphere(float radius) : m_radius(radius)

{

ParametricInterval interval = { ivec2(20, 20), vec2(Pi, TwoPi) };

SetInterval(interval);

}

vec3 Evaluate(const vec2& domain) const

{

float u = domain.x, v = domain.y;

float x = m_radius * sin(u) * cos(v);

float y = m_radius * cos(u);

float z = m_radius * -sin(u) * sin(v);

return vec3(x, y, z);

}

private:

float m_radius;

};

// ...

The classes in Example 3-10 request their desired tessellation granularity and domain bound by calling SetInterval from their constructors. More importantly, these classes implement the pure virtual Evaluate method, which simply applies Equation 3-1 or 3-2.

Each of the previous equations is only one of several possible parameterizations for their respective shapes. For example, the z equation for the sphere could be negated, and it would still describe a sphere.
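To make the point about alternative parameterizations concrete, here is a minimal standalone sketch. It does not use the book’s vec3 class; the Point3 struct, the zSign parameter, and both function names are hypothetical stand-ins introduced only for illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the book's vec3, so the sketch compiles on its own.
struct Point3 { float x, y, z; };

// Same formula as Sphere::Evaluate in Example 3-10, except that the sign of
// the z term is a parameter: zSign = -1 matches the listing, and zSign = +1
// is the negated variant.
Point3 EvaluateSphere(float radius, float u, float v, float zSign)
{
    assert(zSign == 1.0f || zSign == -1.0f);
    Point3 p;
    p.x = radius * std::sin(u) * std::cos(v);
    p.y = radius * std::cos(u);
    p.z = zSign * radius * std::sin(u) * std::sin(v);
    return p;
}

// Distance from the origin; for any point on the sphere it equals the radius.
float DistanceFromOrigin(const Point3& p)
{
    return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
}
```

For any (u, v) pair, both variants land at the same distance from the origin. The only difference is that each point is mirrored across the xy-plane, so the two functions trace out the same sphere.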

In addition to the cone and sphere, the wireframe viewer allows the user to see four other interesting parametric surfaces: a torus, a knot, a Möbius strip,[3] and a Klein bottle (see Figure 3-4). I’ve already shown you the classes for the sphere and cone; you can find code for the other shapes at this book’s website. They basically do nothing more than evaluate various well-known parametric equations. Perhaps more interesting is their common base class, shown in Example 3-11. To add this file to Xcode, right-click the Classes folder, choose Add→New file, select C and C++, and choose Header File. Call it ParametricSurface.hpp, and replace everything in it with the code shown here.

#include "Interfaces.hpp"

struct ParametricInterval {

ivec2 Divisions;

vec2 UpperBound;

};

class ParametricSurface : public ISurface {

public:

int GetVertexCount() const;

int GetLineIndexCount() const;

void GenerateVertices(vector<float>& vertices) const;

void GenerateLineIndices(vector<unsigned short>& indices) const;

protected:

void SetInterval(const ParametricInterval& interval);

virtual vec3 Evaluate(const vec2& domain) const = 0;

private:

vec2 ComputeDomain(float i, float j) const;

vec2 m_upperBound;

ivec2 m_slices;

ivec2 m_divisions;

};

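I’ll explain the ISurface interface later; first let’s take a look at the elements that are controlled by subclasses:

Divisions is the tessellation granularity: the number of divisions along each axis of the domain.

UpperBound is the upper bound of the domain; the lower bound is implicitly (0, 0).

SetInterval is called by the subclass, typically from its constructor, to describe the domain interval.

Evaluate is the pure virtual method in which the subclass evaluates its parametric equation.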

Example 3-12 shows the implementation of the ParametricSurface class. Add a new C++ file to your Xcode project called ParametricSurface.cpp (but deselect the option to create the associated header file). Replace everything in it with the code shown.

#include "ParametricSurface.hpp"

void ParametricSurface::SetInterval(const ParametricInterval& interval)

{

m_upperBound = interval.UpperBound;

m_divisions = interval.Divisions;

m_slices = m_divisions - ivec2(1, 1);

}

int ParametricSurface::GetVertexCount() const

{

return m_divisions.x * m_divisions.y;

}

int ParametricSurface::GetLineIndexCount() const

{

return 4 * m_slices.x * m_slices.y;

}

vec2 ParametricSurface::ComputeDomain(float x, float y) const

{

return vec2(x * m_upperBound.x / m_slices.x,

y * m_upperBound.y / m_slices.y);

}

void ParametricSurface::GenerateVertices(vector<float>& vertices) const

{

vertices.resize(GetVertexCount() * 3);

vec3* position = (vec3*) &vertices[0];

for (int j = 0; j < m_divisions.y; j++) {

for (int i = 0; i < m_divisions.x; i++) {

vec2 domain = ComputeDomain(i, j);

vec3 range = Evaluate(domain);

*position++ = range;

}

}

}

void ParametricSurface::GenerateLineIndices(vector<unsigned short>& indices) const

{

indices.resize(GetLineIndexCount());

vector<unsigned short>::iterator index = indices.begin();

for (int j = 0, vertex = 0; j < m_slices.y; j++) {

for (int i = 0; i < m_slices.x; i++) {

int next = (i + 1) % m_divisions.x;

*index++ = vertex + i;

*index++ = vertex + next;

*index++ = vertex + i;

*index++ = vertex + i + m_divisions.x;

}

vertex += m_divisions.x;

}

}

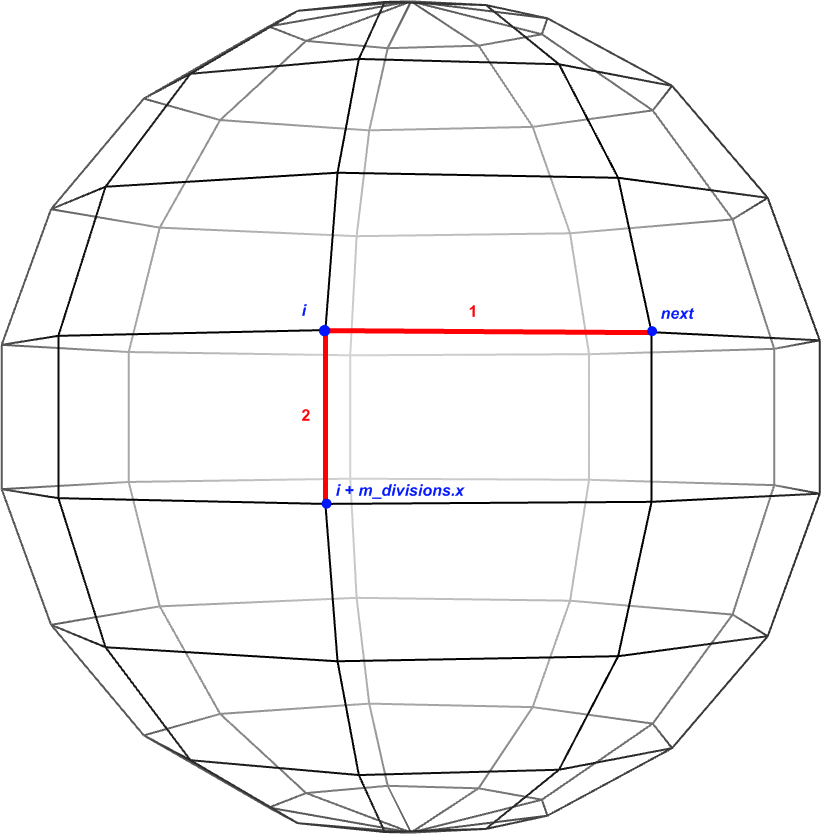

The GenerateLineIndices method deserves a bit of an explanation. Picture a globe of the earth and how it has lines for latitude and longitude. The first two indices in the loop correspond to a latitudinal line segment; the latter two correspond to a longitudinal line segment (see Figure 3-5). Also note some sneaky usage of the modulo operator for wrapping back to zero when closing a loop.
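The index pattern is easier to see on a tiny grid. The following is a standalone sketch of the same loop; BuildLineIndices is a hypothetical free function that takes the division counts as plain integers instead of reading them from class members.

```cpp
#include <cassert>
#include <vector>

// Standalone version of the index loop in Example 3-12. divisionsX and
// divisionsY are the vertex counts along each axis of the grid.
std::vector<unsigned short> BuildLineIndices(int divisionsX, int divisionsY)
{
    assert(divisionsX > 1 && divisionsY > 1);
    const int slicesX = divisionsX - 1;
    const int slicesY = divisionsY - 1;

    std::vector<unsigned short> indices;
    indices.reserve(4 * slicesX * slicesY);

    for (int j = 0, vertex = 0; j < slicesY; j++) {
        for (int i = 0; i < slicesX; i++) {
            int next = (i + 1) % divisionsX;

            // Segment to the next vertex in the current row.
            indices.push_back(static_cast<unsigned short>(vertex + i));
            indices.push_back(static_cast<unsigned short>(vertex + next));

            // Segment to the same column in the next row.
            indices.push_back(static_cast<unsigned short>(vertex + i));
            indices.push_back(static_cast<unsigned short>(vertex + i + divisionsX));
        }
        vertex += divisionsX;
    }
    return indices;
}
```

For a 3×3 grid of vertices, this produces 16 indices: 0, 1, 0, 3, then 1, 2, 1, 4, then 3, 4, 3, 6, and finally 4, 5, 4, 7. Each group of four describes two line segments that share a starting vertex.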

Designing the Interfaces

In the HelloCone and HelloArrow samples, you might have noticed some duplication of logic between the ES 1.1 and ES 2.0 backends. With the wireframe viewer sample, we’re raising the bar on complexity, so we’ll avoid duplicated code by introducing a new C++ component called ApplicationEngine (this was mentioned in Chapter 1; see Figure 1-5). The application engine will contain all the logic that isn’t coupled to a particular graphics API.

Example 3-13 shows the contents of Interfaces.hpp, which defines three component interfaces and some related types. Add a new C and C++ header file to your Xcode project called Interfaces.hpp. Replace everything in it with the code shown.

#pragma once

#include "Vector.hpp"

#include "Quaternion.hpp"

#include <vector>

using std::vector;

struct IApplicationEngine {

virtual void Initialize(int width, int height) = 0;

virtual void Render() const = 0;

virtual void UpdateAnimation(float timeStep) = 0;

virtual void OnFingerUp(ivec2 location) = 0;

virtual void OnFingerDown(ivec2 location) = 0;

virtual void OnFingerMove(ivec2 oldLocation, ivec2 newLocation) = 0;

virtual ~IApplicationEngine() {}

};

struct ISurface {

virtual int GetVertexCount() const = 0;

virtual int GetLineIndexCount() const = 0;

virtual void GenerateVertices(vector<float>& vertices) const = 0;

virtual void GenerateLineIndices(vector<unsigned short>& indices) const = 0;

virtual ~ISurface() {}

};

struct Visual {

vec3 Color;

ivec2 LowerLeft;

ivec2 ViewportSize;

Quaternion Orientation;

};

struct IRenderingEngine {

virtual void Initialize(const vector<ISurface*>& surfaces) = 0;

virtual void Render(const vector<Visual>& visuals) const = 0;

virtual ~IRenderingEngine() {}

};

IApplicationEngine* CreateApplicationEngine(IRenderingEngine* renderingEngine);

namespace ES1 { IRenderingEngine* CreateRenderingEngine(); }

namespace ES2 { IRenderingEngine* CreateRenderingEngine(); }

IApplicationEngine is consumed by GLView; it contains logic common to both rendering backends.

ISurface is consumed by the rendering engines when they generate VBOs for the parametric surfaces.

Visual describes the dynamic visual properties of a surface; it gets passed from the application engine to the rendering engine at every frame.

CreateApplicationEngine is the factory method for the application engine; the caller determines OpenGL capabilities and passes in the appropriate rendering engine.

ES1::CreateRenderingEngine and ES2::CreateRenderingEngine are the namespace-qualified factory methods for the two rendering engines.

In an effort to move as much logic into the application engine as possible, IRenderingEngine has only two methods: Initialize and Render. We’ll describe them in detail later.
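The way the factories fit together can be sketched with stub types. The code below is an illustration under stated assumptions, not the book’s code: the interfaces are trimmed down, the Name and BackendName methods exist only so the sketch has something observable, and the supportsES2 flag stands in for whatever capability check the caller performs.

```cpp
#include <cassert>
#include <string>

// Trimmed-down, hypothetical stand-ins for the interfaces in Example 3-13.
struct IRenderingEngine {
    virtual std::string Name() const = 0; // illustration only, not in the book
    virtual ~IRenderingEngine() {}
};

struct IApplicationEngine {
    virtual std::string BackendName() const = 0; // illustration only
    virtual ~IApplicationEngine() {}
};

namespace ES1 {
    struct Engine : IRenderingEngine {
        std::string Name() const { return "ES1"; }
    };
    IRenderingEngine* CreateRenderingEngine() { return new Engine(); }
}

namespace ES2 {
    struct Engine : IRenderingEngine {
        std::string Name() const { return "ES2"; }
    };
    IRenderingEngine* CreateRenderingEngine() { return new Engine(); }
}

// The application engine takes ownership of the rendering engine it is given.
class ApplicationEngine : public IApplicationEngine {
public:
    explicit ApplicationEngine(IRenderingEngine* engine) : m_engine(engine)
    {
        assert(engine != 0);
    }
    ~ApplicationEngine() { delete m_engine; }
    std::string BackendName() const { return m_engine->Name(); }
private:
    IRenderingEngine* m_engine;
};

IApplicationEngine* CreateApplicationEngine(IRenderingEngine* engine)
{
    return new ApplicationEngine(engine);
}

// The caller decides which backend to build, then hands it over.
IApplicationEngine* CreateEngineFor(bool supportsES2)
{
    IRenderingEngine* renderer = supportsES2 ? ES2::CreateRenderingEngine()
                                             : ES1::CreateRenderingEngine();
    return CreateApplicationEngine(renderer);
}
```

Because both factories return the same interface type, the application engine never needs to know which OpenGL ES version is underneath it.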

Handling Trackball Rotation

To ensure high portability of the application logic, we avoid making any OpenGL calls whatsoever from within the ApplicationEngine class. Example 3-14 is the complete listing of its initial implementation. Add a new C++ file to your Xcode project called ApplicationEngine.cpp (deselect the option to create the associated .h file). Replace everything in it with the code shown.

#include "Interfaces.hpp"

#include "ParametricEquations.hpp"

using namespace std;

static const int SurfaceCount = 6;

class ApplicationEngine : public IApplicationEngine {

public:

ApplicationEngine(IRenderingEngine* renderingEngine);

~ApplicationEngine();

void Initialize(int width, int height);

void OnFingerUp(ivec2 location);

void OnFingerDown(ivec2 location);

void OnFingerMove(ivec2 oldLocation, ivec2 newLocation);

void Render() const;

void UpdateAnimation(float dt);

private:

vec3 MapToSphere(ivec2 touchpoint) const;

float m_trackballRadius;

ivec2 m_screenSize;

ivec2 m_centerPoint;

ivec2 m_fingerStart;

bool m_spinning;

Quaternion m_orientation;

Quaternion m_previousOrientation;

IRenderingEngine* m_renderingEngine;

};

IApplicationEngine* CreateApplicationEngine(IRenderingEngine* renderingEngine)

{

return new ApplicationEngine(renderingEngine);

}

ApplicationEngine::ApplicationEngine(IRenderingEngine* renderingEngine) :

m_spinning(false),

m_renderingEngine(renderingEngine)

{

}

ApplicationEngine::~ApplicationEngine()

{

delete m_renderingEngine;

}

void ApplicationEngine::Initialize(int width, int height)

{

m_trackballRadius = width / 3;

m_screenSize = ivec2(width, height);

m_centerPoint = m_screenSize / 2;

vector<ISurface*> surfaces(SurfaceCount);

surfaces[0] = new Cone(3, 1);

surfaces[1] = new Sphere(1.4f);

surfaces[2] = new Torus(1.4f, 0.3f);

surfaces[3] = new TrefoilKnot(1.8f);

surfaces[4] = new KleinBottle(0.2f);

surfaces[5] = new MobiusStrip(1);

m_renderingEngine->Initialize(surfaces);

for (int i = 0; i < SurfaceCount; i++)

delete surfaces[i];

}

void ApplicationEngine::Render() const

{

Visual visual;

visual.Color = m_spinning ? vec3(1, 1, 1) : vec3(0, 1, 1);

visual.LowerLeft = ivec2(0, 48);

visual.ViewportSize = ivec2(320, 432);

visual.Orientation = m_orientation;

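// Render expects a vector of visuals, so wrap the single visual.

vector<Visual> visuals(1, visual);

m_renderingEngine->Render(visuals);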

}

void ApplicationEngine::UpdateAnimation(float dt)

{

}

void ApplicationEngine::OnFingerUp(ivec2 location)

{

m_spinning = false;

}

void ApplicationEngine::OnFingerDown(ivec2 location)

{

m_fingerStart = location;

m_previousOrientation = m_orientation;

m_spinning = true;

}

void ApplicationEngine::OnFingerMove(ivec2 oldLocation, ivec2 location)

{

if (m_spinning) {

vec3 start = MapToSphere(m_fingerStart);

vec3 end = MapToSphere(location);

Quaternion delta = Quaternion::CreateFromVectors(start, end);

m_orientation = delta.Rotated(m_previousOrientation);

}

}

vec3 ApplicationEngine::MapToSphere(ivec2 touchpoint) const

{

vec2 p = touchpoint - m_centerPoint;

// Flip the y-axis because pixel coords increase toward the bottom.

p.y = -p.y;

const float radius = m_trackballRadius;

const float safeRadius = radius - 1;

if (p.Length() > safeRadius) {

float theta = atan2(p.y, p.x);

p.x = safeRadius * cos(theta);

p.y = safeRadius * sin(theta);

}

float z = sqrt(radius * radius - p.LengthSquared());

vec3 mapped = vec3(p.x, p.y, z);

return mapped / radius;

}

The bulk of Example 3-14 is dedicated to handling the trackball-like behavior with quaternions. I find the CreateFromVectors method to be the most natural way of constructing a quaternion. Recall that it takes two unit vectors at the origin and computes the quaternion that moves the first vector onto the second. To achieve a trackball effect, these two vectors are generated by projecting touch points onto the surface of the virtual trackball (see the MapToSphere method). Note that if a touch point is outside the circumference of the trackball (or directly on it), then MapToSphere snaps the touch point to just inside the circumference. This allows the user to perform a constrained rotation around the z-axis by sliding a finger horizontally or vertically near the edge of the screen.
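The two steps, projecting a touch point onto the trackball and building a rotation from two unit vectors, can be tried in isolation with the sketch below. Vec3 and Quat are hypothetical stand-ins for the book’s vec3 and Quaternion classes. FromUnitVectors uses one common construction (cross product for the axis, one plus the dot product for the scalar part); it is an assumption for illustration and is not necessarily how the book’s CreateFromVectors is implemented.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };      // hypothetical stand-in for vec3
struct Quat { float x, y, z, w; };   // hypothetical stand-in for Quaternion

// Mirrors MapToSphere in Example 3-14, but takes plain floats.
Vec3 MapToSphere(float touchX, float touchY,
                 float centerX, float centerY, float radius)
{
    assert(radius > 1.0f);
    float px = touchX - centerX;
    float py = -(touchY - centerY);   // pixel y increases toward the bottom
    const float safeRadius = radius - 1;
    if (std::sqrt(px * px + py * py) > safeRadius) {
        // Snap the point to just inside the trackball's circumference.
        float theta = std::atan2(py, px);
        px = safeRadius * std::cos(theta);
        py = safeRadius * std::sin(theta);
    }
    float z = std::sqrt(radius * radius - (px * px + py * py));
    Vec3 mapped = { px / radius, py / radius, z / radius };
    return mapped;
}

// Builds a unit quaternion that rotates a onto b.
// Assumes a and b are unit vectors that are not exactly opposite.
Quat FromUnitVectors(const Vec3& a, const Vec3& b)
{
    Quat q;
    q.x = a.y * b.z - a.z * b.y;
    q.y = a.z * b.x - a.x * b.z;
    q.z = a.x * b.y - a.y * b.x;
    q.w = 1.0f + (a.x * b.x + a.y * b.y + a.z * b.z);
    float len = std::sqrt(q.x * q.x + q.y * q.y + q.z * q.z + q.w * q.w);
    assert(len > 0.0f);
    q.x /= len; q.y /= len; q.z /= len; q.w /= len;
    return q;
}
```

A touch at the center of the trackball maps to (0, 0, 1), the point nearest the viewer, while a touch far outside the trackball still maps to a unit vector because of the snapping step. Rotating the x-axis onto the y-axis yields a quarter turn around z.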

Implementing the Rendering Engine

So far we’ve managed to exhibit most of the wireframe viewer code without any OpenGL whatsoever! It’s time to remedy that by showing the ES 1.1 backend class in Example 3-15. Add a new C++ file to your Xcode project called RenderingEngine.ES1.cpp (deselect the option to create the associated .h file). Replace everything in it with the code shown. You can download the ES 2.0 version from this book’s companion website (and it is included with the skeleton project mentioned early in this section).

#include <OpenGLES/ES1/gl.h>

#include <OpenGLES/ES1/glext.h>

#include "Interfaces.hpp"

#include "Matrix.hpp"

namespace ES1 {

struct Drawable {

GLuint VertexBuffer;

GLuint IndexBuffer;

int IndexCount;

};

class RenderingEngine : public IRenderingEngine {

public:

RenderingEngine();

void Initialize(const vector<ISurface*>& surfaces);

void Render(const vector<Visual>& visuals) const;

private:

vector<Drawable> m_drawables;

GLuint m_colorRenderbuffer;

mat4 m_translation;

};

IRenderingEngine* CreateRenderingEngine()

{

return new RenderingEngine();

}

RenderingEngine::RenderingEngine()

{

glGenRenderbuffersOES(1, &m_colorRenderbuffer);

glBindRenderbufferOES(GL_RENDERBUFFER_OES, m_colorRenderbuffer);

}

void RenderingEngine::Initialize(const vector<ISurface*>& surfaces)

{

vector<ISurface*>::const_iterator surface;

for (surface = surfaces.begin();

surface != surfaces.end(); ++surface) {

// Create the VBO for the vertices.

vector<float> vertices;

(*surface)->GenerateVertices(vertices);

GLuint vertexBuffer;

glGenBuffers(1, &vertexBuffer);

glBindBuffer(GL_ARRAY_BUFFER, vertexBuffer);

glBufferData(GL_ARRAY_BUFFER,

vertices.size() * sizeof(vertices[0]),

&vertices[0],

GL_STATIC_DRAW);

// Create a new VBO for the indices if needed.

int indexCount = (*surface)->GetLineIndexCount();

GLuint indexBuffer;

if (!m_drawables.empty() &&

indexCount == m_drawables[0].IndexCount) {

indexBuffer = m_drawables[0].IndexBuffer;

} else {

vector<GLushort> indices(indexCount);

(*surface)->GenerateLineIndices(indices);

glGenBuffers(1, &indexBuffer);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indexBuffer);

glBufferData(GL_ELEMENT_ARRAY_BUFFER,

indexCount * sizeof(GLushort),

&indices[0],

GL_STATIC_DRAW);

}

Drawable drawable = { vertexBuffer, indexBuffer, indexCount};

m_drawables.push_back(drawable);

}

// Create the framebuffer object.

GLuint framebuffer;

glGenFramebuffersOES(1, &framebuffer);

glBindFramebufferOES(GL_FRAMEBUFFER_OES, framebuffer);

glFramebufferRenderbufferOES(GL_FRAMEBUFFER_OES,

GL_COLOR_ATTACHMENT0_OES,

GL_RENDERBUFFER_OES,

m_colorRenderbuffer);

glBindRenderbufferOES(GL_RENDERBUFFER_OES, m_colorRenderbuffer);

glEnableClientState(GL_VERTEX_ARRAY);

m_translation = mat4::Translate(0, 0, -7);

}

void RenderingEngine::Render(const vector<Visual>& visuals) const

{

glClear(GL_COLOR_BUFFER_BIT);

vector<Visual>::const_iterator visual = visuals.begin();

for (int visualIndex = 0;

visual != visuals.end();

++visual, ++visualIndex)

{

// Set the viewport transform.

ivec2 size = visual->ViewportSize;

ivec2 lowerLeft = visual->LowerLeft;

glViewport(lowerLeft.x, lowerLeft.y, size.x, size.y);

// Set the model-view transform.

mat4 rotation = visual->Orientation.ToMatrix();

mat4 modelview = rotation * m_translation;

glMatrixMode(GL_MODELVIEW);

glLoadMatrixf(modelview.Pointer());

// Set the projection transform.

float h = 4.0f * size.y / size.x;

mat4 projection = mat4::Frustum(-2, 2, -h / 2, h / 2, 5, 10);

glMatrixMode(GL_PROJECTION);

glLoadMatrixf(projection.Pointer());

// Set the color.

vec3 color = visual->Color;

glColor4f(color.x, color.y, color.z, 1);

// Draw the wireframe.

int stride = sizeof(vec3);

const Drawable& drawable = m_drawables[visualIndex];

glBindBuffer(GL_ARRAY_BUFFER, drawable.VertexBuffer);

glVertexPointer(3, GL_FLOAT, stride, 0);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, drawable.IndexBuffer);

glDrawElements(GL_LINES, drawable.IndexCount, GL_UNSIGNED_SHORT, 0);

}

}

}

There are no new OpenGL concepts here; you should be able to follow the code in Example 3-15. We now have all the big pieces in place for the wireframe viewer. At this point, it shows only a single wireframe; this is improved in the coming sections.
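One detail in the Render method worth working through is the projection: the frustum is always 4 units wide, and its height is scaled by the viewport’s aspect ratio so that shapes are not stretched. The sketch below isolates that arithmetic; FrustumBounds and ComputeFrustumBounds are hypothetical names, and the near and far planes (5 and 10 in the listing) are left out.

```cpp
#include <cassert>

// Hypothetical helper: the left/right/bottom/top values that Example 3-15
// passes to mat4::Frustum for a viewport of the given size.
struct FrustumBounds { float left, right, bottom, top; };

FrustumBounds ComputeFrustumBounds(int viewportWidth, int viewportHeight)
{
    assert(viewportWidth > 0 && viewportHeight > 0);
    // Width is fixed at 4 units; height scales with the aspect ratio.
    float h = 4.0f * viewportHeight / viewportWidth;
    FrustumBounds bounds = { -2.0f, 2.0f, -h / 2, h / 2 };
    return bounds;
}
```

For the 320×432 main viewport, h is 5.4, so the frustum spans -2.7 to 2.7 vertically. For a wide 64×48 viewport, h is 3, giving -1.5 to 1.5.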

Poor Man’s Tab Bar

Apple provides the UITabBar widget as part of the UIKit framework. This is the familiar list of gray icons that many applications have along the bottom of the screen, as shown in Figure 3-6.

Since UIKit widgets are outside the scope of this book, you’ll be using OpenGL to create a poor man’s tab bar for switching between the various parametric surfaces, as in Figure 3-7.

In many situations like this, a standard UITabBar is preferable since it creates a more consistent look with other iPhone applications. But in our case, we’ll create a fun transition effect: pushing a button will cause it to “slide out” of the tab bar and into the main viewport. For this level of control over rendering, UIKit doesn’t suffice.

The wireframe viewer has a total of six parametric surfaces, but the button bar has only five. When the user touches a button, we’ll swap its contents with the surface being displayed in the main viewport. This allows the application to support six surfaces with only five buttons.
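The swap itself is tiny. Here is a minimal sketch of the idea, using a hypothetical TabBarState struct and PressButton function rather than the member variables that the application engine actually uses.

```cpp
#include <algorithm>
#include <cassert>

const int SurfaceCount = 6;
const int ButtonCount = SurfaceCount - 1;

// Hypothetical container for the swap state described above.
struct TabBarState {
    int ButtonSurfaces[ButtonCount]; // surface index shown on each button
    int CurrentSurface;              // surface index in the main viewport
};

// Pressing a button trades its surface with the one in the main viewport.
void PressButton(TabBarState& state, int buttonIndex)
{
    assert(buttonIndex >= 0 && buttonIndex < ButtonCount);
    std::swap(state.ButtonSurfaces[buttonIndex], state.CurrentSurface);
}
```

Starting with buttons holding surfaces 0, 1, 2, 4, and 5 and surface 3 in the main viewport, pressing button 3 moves surface 4 into the main viewport and leaves surface 3 on that button. Every surface is always in exactly one place, so none is ever lost.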

The state for the five buttons and the button-detection code lives in the application engine. Example 3-16 shows the updated class declaration from ApplicationEngine.cpp; lines that are new since Example 3-14 are marked with a trailing // new comment. No modifications to the two rendering engines are required.

#include "Interfaces.hpp"

#include "ParametricEquations.hpp"

#include <algorithm> // new

using namespace std;

static const int SurfaceCount = 6;

static const int ButtonCount = SurfaceCount - 1; // new

class ApplicationEngine : public IApplicationEngine {

public:

ApplicationEngine(IRenderingEngine* renderingEngine);

~ApplicationEngine();

void Initialize(int width, int height);

void OnFingerUp(ivec2 location);

void OnFingerDown(ivec2 location);

void OnFingerMove(ivec2 oldLocation, ivec2 newLocation);

void Render() const;

void UpdateAnimation(float dt);

private:

void PopulateVisuals(Visual* visuals) const; // new

int MapToButton(ivec2 touchpoint) const; // new

vec3 MapToSphere(ivec2 touchpoint) const;

float m_trackballRadius;

ivec2 m_screenSize;

ivec2 m_centerPoint;

ivec2 m_fingerStart;

bool m_spinning;

Quaternion m_orientation;

Quaternion m_previousOrientation;

IRenderingEngine* m_renderingEngine;

int m_currentSurface; // new

ivec2 m_buttonSize; // new

int m_pressedButton; // new

int m_buttonSurfaces[ButtonCount]; // new

};

Example 3-17 shows the implementation. Methods left unchanged (such as MapToSphere) are omitted for brevity. You’ll be replacing the following methods: ApplicationEngine::ApplicationEngine, Initialize, Render, OnFingerUp, OnFingerDown, and OnFingerMove. There are two new methods you’ll be adding: ApplicationEngine::PopulateVisuals and MapToButton.

ApplicationEngine::ApplicationEngine(IRenderingEngine* renderingEngine) :

m_spinning(false),

m_renderingEngine(renderingEngine),

m_pressedButton(-1)

{

m_buttonSurfaces[0] = 0;

m_buttonSurfaces[1] = 1;

m_buttonSurfaces[2] = 2;

m_buttonSurfaces[3] = 4;

m_buttonSurfaces[4] = 5;

m_currentSurface = 3;

}

void ApplicationEngine::Initialize(int width, int height)

{

m_trackballRadius = width / 3;

m_buttonSize.y = height / 10;

m_buttonSize.x = 4 * m_buttonSize.y / 3;

m_screenSize = ivec2(width, height - m_buttonSize.y);

m_centerPoint = m_screenSize / 2;

vector<ISurface*> surfaces(SurfaceCount);

surfaces[0] = new Cone(3, 1);

surfaces[1] = new Sphere(1.4f);

surfaces[2] = new Torus(1.4f, 0.3f);

surfaces[3] = new TrefoilKnot(1.8f);

surfaces[4] = new KleinBottle(0.2f);

surfaces[5] = new MobiusStrip(1);

m_renderingEngine->Initialize(surfaces);

for (int i = 0; i < SurfaceCount; i++)

delete surfaces[i];

}

void ApplicationEngine::PopulateVisuals(Visual* visuals) const

{

for (int buttonIndex = 0; buttonIndex < ButtonCount; buttonIndex++) {

int visualIndex = m_buttonSurfaces[buttonIndex];

visuals[visualIndex].Color = vec3(0.75f, 0.75f, 0.75f);

if (m_pressedButton == buttonIndex)

visuals[visualIndex].Color = vec3(1, 1, 1);

visuals[visualIndex].ViewportSize = m_buttonSize;

visuals[visualIndex].LowerLeft.x = buttonIndex * m_buttonSize.x;

visuals[visualIndex].LowerLeft.y = 0;

visuals[visualIndex].Orientation = Quaternion();

}

visuals[m_currentSurface].Color = m_spinning ? vec3(1, 1, 1) : vec3(0, 1, 1);

visuals[m_currentSurface].LowerLeft = ivec2(0, 48);

visuals[m_currentSurface].ViewportSize = ivec2(320, 432);

visuals[m_currentSurface].Orientation = m_orientation;

}

void ApplicationEngine::Render() const

{

vector<Visual> visuals(SurfaceCount);

PopulateVisuals(&visuals[0]);

m_renderingEngine->Render(visuals);

}

void ApplicationEngine::OnFingerUp(ivec2 location)

{

m_spinning = false;

if (m_pressedButton != -1 && m_pressedButton == MapToButton(location))

swap(m_buttonSurfaces[m_pressedButton], m_currentSurface);

m_pressedButton = -1;

}

void ApplicationEngine::OnFingerDown(ivec2 location)

{

m_fingerStart = location;

m_previousOrientation = m_orientation;

m_pressedButton = MapToButton(location);

if (m_pressedButton == -1)

m_spinning = true;

}

void ApplicationEngine::OnFingerMove(ivec2 oldLocation, ivec2 location)

{

if (m_spinning) {

vec3 start = MapToSphere(m_fingerStart);

vec3 end = MapToSphere(location);

Quaternion delta = Quaternion::CreateFromVectors(start, end);

m_orientation = delta.Rotated(m_previousOrientation);

}

if (m_pressedButton != -1 && m_pressedButton != MapToButton(location))

m_pressedButton = -1;

}

int ApplicationEngine::MapToButton(ivec2 touchpoint) const

{

if (touchpoint.y < m_screenSize.y - m_buttonSize.y)

return -1;

int buttonIndex = touchpoint.x / m_buttonSize.x;

if (buttonIndex >= ButtonCount)

return -1;

return buttonIndex;

}

Go ahead and try it—at this point, the wireframe viewer is starting to feel like a real application!

Animating the Transition

The button-swapping strategy is clever but possibly jarring to users; after playing with the app for a while, the user might start to notice that the tab bar is slowly being rearranged. To make the swap effect more obvious and to give the app more of a fun Apple feel, let’s create a transition animation that actually shows the button being swapped with the main viewport. Figure 3-8 depicts this animation.

Again, no changes to the two rendering engines are required, because all the logic can be constrained to ApplicationEngine. In addition to animating the viewport, we’ll also animate the color (the tab bar wireframes are drab gray) and the orientation (the tab bar wireframes are all in the “home” position). We can reuse the existing Visual class for this; we need two sets of Visual objects for the start and end of the animation. While the animation is active, we’ll tween the values between the starting and ending visuals. Let’s also create an Animation structure to bundle the visuals with a few other animation parameters, as shown in bold in Example 3-18.
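As a rough guide to the shape of such a structure, here is a minimal sketch with field names inferred from how m_animation is used in Example 3-19. The stand-in vec3, ivec2, and Quaternion types are assumptions so that the sketch compiles on its own, and the Progress helper (including its clamping) is a hypothetical addition that the book’s code does not contain.

```cpp
#include <cassert>

// Hypothetical stand-ins so the sketch compiles without the book's headers.
struct vec3 { float x, y, z; };
struct ivec2 { int x, y; };
struct Quaternion { float x, y, z, w; };

struct Visual {
    vec3 Color;
    ivec2 LowerLeft;
    ivec2 ViewportSize;
    Quaternion Orientation;
};

static const int SurfaceCount = 6;

// Field names are inferred from how m_animation is used in Example 3-19.
struct Animation {
    bool Active;
    float Elapsed;
    float Duration;
    Visual StartingVisuals[SurfaceCount];
    Visual EndingVisuals[SurfaceCount];
};

// Hypothetical helper: normalized progress, clamped to [0, 1], for tweening.
float Progress(const Animation& animation)
{
    assert(animation.Duration > 0.0f);
    float t = animation.Elapsed / animation.Duration;
    return t < 0.0f ? 0.0f : (t > 1.0f ? 1.0f : t);
}
```

With a duration of 0.25 seconds, an elapsed time of 0.125 seconds gives a progress of 0.5, which is the halfway point between the starting and ending visuals.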

Example 3-19 shows the new implementation of ApplicationEngine. Unchanged methods are omitted for brevity. Remember, animation is all about interpolation! The Render method leverages the Lerp and Slerp methods from our vector class library to achieve the animation in a surprisingly straightforward manner.

ApplicationEngine::ApplicationEngine(IRenderingEngine* renderingEngine) :

m_spinning(false),

m_renderingEngine(renderingEngine),

m_pressedButton(-1)

{

m_animation.Active = false;

// Same as in Example 3-17

....

}

void ApplicationEngine::Render() const

{

vector<Visual> visuals(SurfaceCount);

if (!m_animation.Active) {

PopulateVisuals(&visuals[0]);

} else {

float t = m_animation.Elapsed / m_animation.Duration;

for (int i = 0; i < SurfaceCount; i++) {

const Visual& start = m_animation.StartingVisuals[i];

const Visual& end = m_animation.EndingVisuals[i];

Visual& tweened = visuals[i];

tweened.Color = start.Color.Lerp(t, end.Color);

tweened.LowerLeft = start.LowerLeft.Lerp(t, end.LowerLeft);

tweened.ViewportSize = start.ViewportSize.Lerp(t, end.ViewportSize);

tweened.Orientation = start.Orientation.Slerp(t, end.Orientation);

}

}

m_renderingEngine->Render(visuals);

}

void ApplicationEngine::UpdateAnimation(float dt)

{

if (m_animation.Active) {

m_animation.Elapsed += dt;

if (m_animation.Elapsed > m_animation.Duration)

m_animation.Active = false;

}

}

void ApplicationEngine::OnFingerUp(ivec2 location)

{

m_spinning = false;

if (m_pressedButton != -1 && m_pressedButton == MapToButton(location) &&

!m_animation.Active)

{

m_animation.Active = true;

m_animation.Elapsed = 0;

m_animation.Duration = 0.25f;

PopulateVisuals(&m_animation.StartingVisuals[0]);

swap(m_buttonSurfaces[m_pressedButton], m_currentSurface);

PopulateVisuals(&m_animation.EndingVisuals[0]);

}

m_pressedButton = -1;

}

That completes the wireframe viewer! As you can see, animation isn’t difficult, and it can give your application that special Apple touch.
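If you want to experiment with the tweening outside the app, linear interpolation is easy to reproduce. The sketch below is a hypothetical free-function version; the book’s vector classes expose Lerp as a method (start.Lerp(t, end)), and the Viewport struct here is invented for illustration.

```cpp
#include <cassert>

// Hypothetical free-function lerp: returns start at t = 0 and end at t = 1.
float Lerp(float t, float start, float end)
{
    assert(t >= 0.0f && t <= 1.0f);
    return start * (1.0f - t) + end * t;
}

struct Viewport { float x, y, width, height; }; // hypothetical

// Blend every viewport field by the same factor t.
Viewport TweenViewport(float t, const Viewport& start, const Viewport& end)
{
    Viewport v = {
        Lerp(t, start.x, end.x),
        Lerp(t, start.y, end.y),
        Lerp(t, start.width, end.width),
        Lerp(t, start.height, end.height)
    };
    return v;
}
```

Halfway through a transition from a 64×48 button at (192, 0) to the 320×432 main viewport at (0, 48), the tweened viewport sits at (96, 24) with a size of 192×240.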

Wrapping Up

This chapter has been a quick exposition of the touchscreen and OpenGL vertex submission. The toy wireframe app is great fun, but it does have a bit of a 1980s feel to it. The next chapter takes iPhone graphics to the next level by explaining the depth buffer, exploring the use of real-time lighting, and showing how to load 3D content from the popular .obj file format. While this chapter has been rather heavy on code listings, the next chapter will be more in the style of Chapter 2, mixing in some math review with a lesson in OpenGL.

[3] True Möbius strips are one-sided surfaces and can cause complications with the lighting algorithms presented in the next chapter. The wireframe viewer actually renders a somewhat flattened Möbius “tube.”
