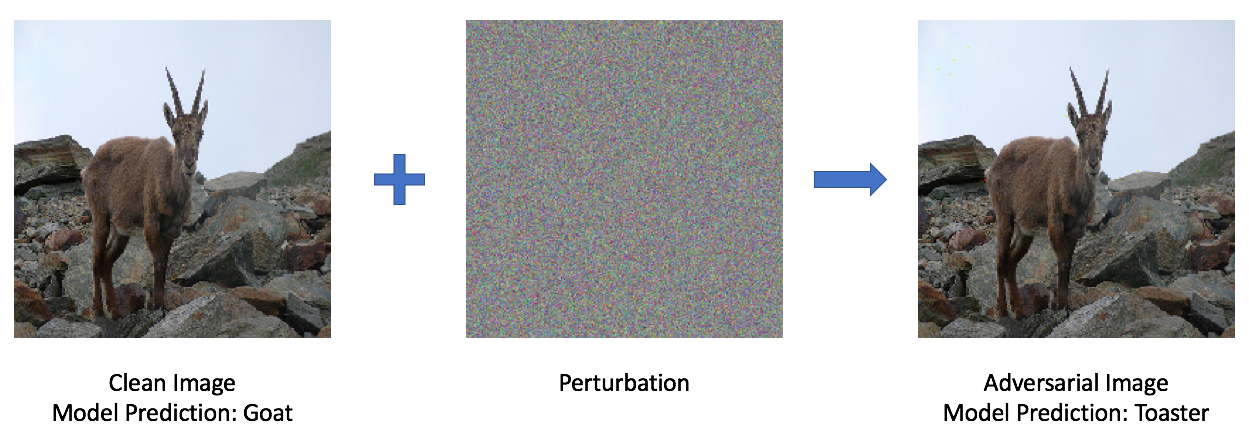

Deep learning algorithms have shown impressive results across numerous tasks, including computer vision, natural language processing, and speech recognition. On several of these tasks, deep learning has already surpassed human performance. However, recent work has shown that these algorithms are highly vulnerable to attacks. By attacks, we mean imperceptible modifications to the input that cause the model to produce a different, typically incorrect, output. Take the following example: