July 2017

Beginner to intermediate

378 pages

10h 26m

English

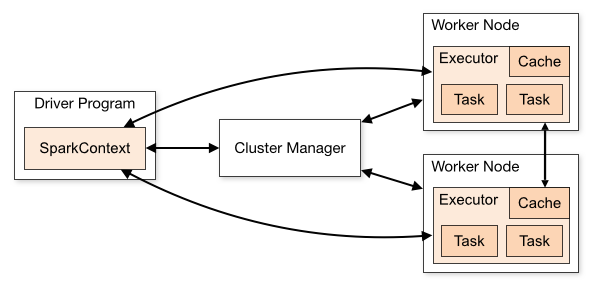

Spark operates across the nodes of a cluster in much the same way as Hive. The job or set of jobs that you execute on Spark is called an application. Each application runs as an independent set of processes across the cluster, coordinated by a main component called the driver. The key object that operates in the driver is the SparkContext.

The following diagram shows a very simple view of the Spark architecture. Spark can connect to different types of cluster managers, such as Apache Mesos, YARN, or its own simple standalone cluster manager. YARN is the most common choice.
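In practice, the choice of cluster manager is usually expressed through the master URL that the application is given at startup. The following is a minimal sketch in plain Python, not Spark's own code, that maps the commonly documented master URL forms to the cluster managers named above. The function name `cluster_manager_for` and the host names are hypothetical, chosen only for illustration.

```python
def cluster_manager_for(master: str) -> str:
    """Name the cluster manager that a Spark master URL points to.

    Illustrative helper only; Spark does its own parsing internally.
    """
    if master == "yarn":
        return "YARN"
    if master.startswith("mesos://"):
        return "Apache Mesos"
    if master.startswith("spark://"):
        return "Spark standalone"
    if master == "local" or master.startswith("local["):
        # Local mode runs everything in one process, with no cluster manager.
        return "local mode"
    raise ValueError(f"unrecognized master URL: {master!r}")


# Hypothetical hosts; 5050 and 7077 are the usual default ports.
EXAMPLE_MASTERS = [
    "yarn",
    "mesos://mesos-host:5050",
    "spark://spark-host:7077",
    "local[*]",
]

for master in EXAMPLE_MASTERS:
    print(master, "->", cluster_manager_for(master))
```

The same string is what an application would typically set as the `spark.master` property, or pass with `--master` to `spark-submit`, before the driver creates its SparkContext.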