December 2018

Beginner to intermediate

684 pages

21h 9m

English

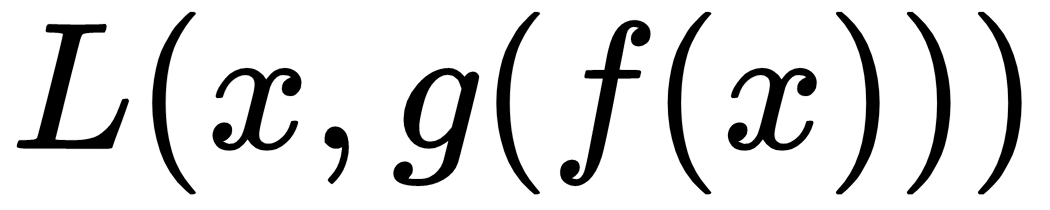

A traditional use case is dimensionality reduction, achieved by limiting the size of the hidden layer so that it performs lossy compression. Such an autoencoder is called undercomplete: because the hidden layer is too small to simply copy the input, minimizing a loss function, L, forces the network to learn the most salient properties of the data. The loss has the following form:
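In the notation commonly used for autoencoders, the loss can be sketched as follows. The symbols are assumptions, since this section does not define them: $x$ is the input, $f$ is the encoder that maps the input to the hidden code, and $g$ is the decoder that maps the code back to the input space.

$$
L\bigl(x,\; g(f(x))\bigr)
$$

Here $L$ penalizes the reconstruction $g(f(x))$ for differing from the original input $x$.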

One example of such a loss function, which we will explore in the next section, is the Mean Squared Error (MSE) between the pixel values of the input images and those of their reconstructions.
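As a minimal sketch of the pixel-wise MSE described above, the following NumPy snippet compares a batch of images with a stand-in for their reconstructions. The function name `reconstruction_mse`, the 28 x 28 image shape, and the synthetic data are illustrative assumptions and do not come from the text; a real reconstruction would be produced by a trained autoencoder.

```python
import numpy as np


def reconstruction_mse(images, reconstructions):
    """Mean squared error averaged over every pixel of every image.

    Hypothetical helper for illustration; both arguments must have the same shape.
    """
    images = np.asarray(images, dtype=np.float64)
    reconstructions = np.asarray(reconstructions, dtype=np.float64)
    if images.shape != reconstructions.shape:
        raise ValueError("images and reconstructions must have the same shape")
    # Squared pixel-wise difference, averaged over all pixels and all images.
    return float(np.mean((images - reconstructions) ** 2))


# Synthetic stand-in data: 4 grayscale "images" with pixel values in [0, 1).
rng = np.random.default_rng(0)
x = rng.random((4, 28, 28))

# Assumed reconstruction: every pixel is off by a constant 0.1.
x_hat = x + 0.1

mse = reconstruction_mse(x, x_hat)
print(f"MSE: {mse:.4f}")
```

Because every pixel in this toy example is off by the same amount, the MSE is simply that offset squared. A perfect reconstruction would give an MSE of zero.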

The appeal of autoencoders, when compared to linear dimensionality-reduction methods, such as Principal ...