December 2018

Beginner to intermediate

684 pages

21h 9m

English

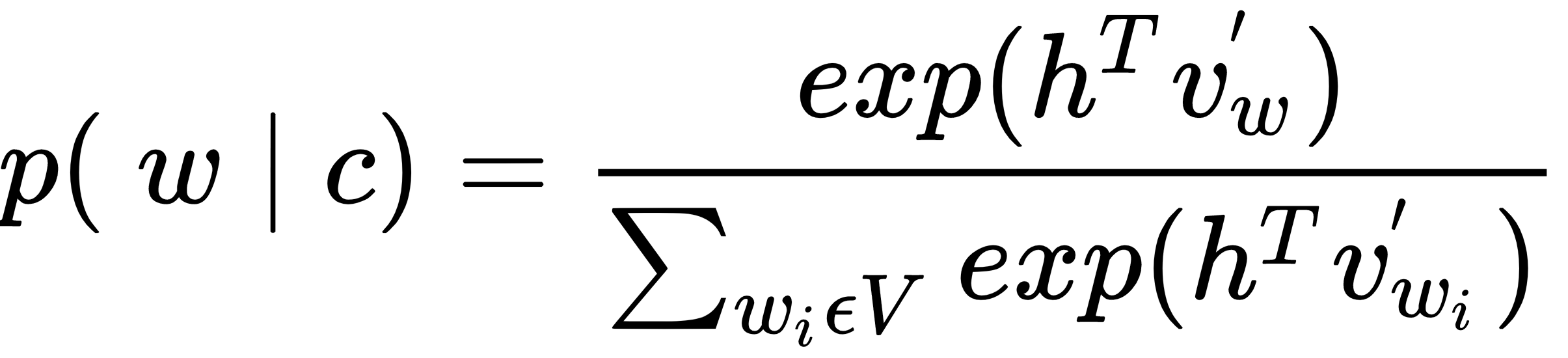

Word2vec models aim to predict a single word out of a potentially very large vocabulary. To implement this multiclass objective, neural networks often use the softmax function, which maps any number of real values to an equal number of probabilities. Here h refers to the embedding, v to the input vectors, c is the context of word w, and V is the vocabulary:

$$p(w \mid c) = \frac{\exp\left(h^\top v_w\right)}{\sum_{w_i \in V} \exp\left(h^\top v_{w_i}\right)}$$
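A minimal pure-Python sketch of this softmax may make the normalization concrete. It is not the book's code. The helper names (`dot`, `softmax_prob`) and the toy three-word vocabulary with 2-dimensional vectors are hypothetical, chosen only for illustration. Subtracting the largest score before exponentiating is a standard numerical-stability trick that leaves the probabilities unchanged.

```python
import math
from typing import Dict, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax_prob(h: Vector, vectors: Dict[str, Vector], word: str) -> float:
    """Return p(word | c) = exp(h . v_word) / sum_i exp(h . v_i).

    h is the embedding of the context c; `vectors` maps every vocabulary
    word to its vector v. The maximum score is subtracted before
    exponentiating to avoid overflow; this does not change the result.
    """
    scores = {w: dot(h, v) for w, v in vectors.items()}  # one dot product per vocabulary word
    m = max(scores.values())
    denom = sum(math.exp(s - m) for s in scores.values())  # normalizer over the whole vocabulary
    return math.exp(scores[word] - m) / denom


# Hypothetical toy vocabulary with 2-dimensional vectors
vocab = {"king": [1.0, 0.0], "queen": [0.0, 1.0], "apple": [-1.0, 0.0]}
h = [2.0, 0.0]
probs = {w: softmax_prob(h, vocab, w) for w in vocab}
print(probs)
```

The probabilities sum to one across the vocabulary, and the word whose vector is most aligned with h receives the largest share.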

However, the cost of the softmax scales linearly with the number of classes: to normalize the probabilities, the denominator requires computing the dot product of h with the vector of every word in the vocabulary. Word2vec models gain efficiency by using a simplified ...
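To see why this is expensive, the following sketch counts the dot products needed to compute the softmax denominator for a single context embedding h. It is an illustrative assumption rather than the book's code: `softmax_denominator`, the random vectors, the dimension of 8, and the vocabulary sizes are all hypothetical. It does not implement the simplified approach word2vec uses; it only shows the cost that approach is meant to avoid.

```python
import math
import random
from typing import Iterable, List, Tuple


def softmax_denominator(h: List[float], vectors: Iterable[List[float]]) -> Tuple[float, int]:
    """Return (sum_i exp(h . v_i), number of dot products computed)."""
    total, n_dots = 0.0, 0
    for v in vectors:
        total += math.exp(sum(x * y for x, y in zip(h, v)))  # one dot product per word
        n_dots += 1
    return total, n_dots


rng = random.Random(0)
dim = 8  # hypothetical embedding size
h = [rng.gauss(0, 0.1) for _ in range(dim)]

costs = {}
for vocab_size in (100, 1_000, 10_000):
    # Hypothetical random word vectors, generated lazily
    vectors = ([rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(vocab_size))
    _, n_dots = softmax_denominator(h, vectors)
    costs[vocab_size] = n_dots
    print(vocab_size, n_dots)
```

Normalizing even one prediction touches every word in the vocabulary, so a tenfold larger vocabulary means tenfold more dot products per training example.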