December 2018

Beginner to intermediate

684 pages

21h 9m

English

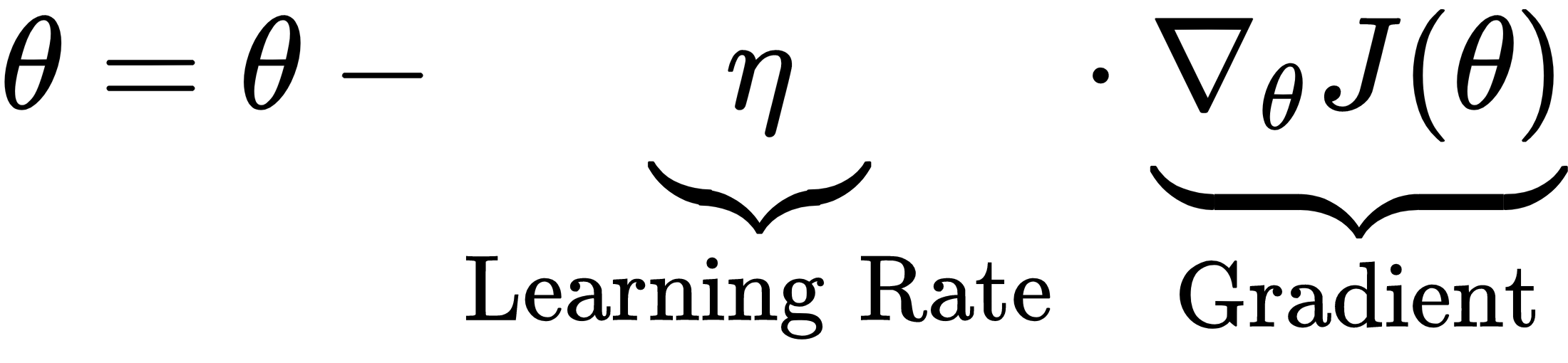

Gradient descent iteratively adjusts the neural network parameters using the information provided by the gradient. For a given parameter, θ, the basic gradient descent rule adjusts the value by the negative gradient of the loss function, J(θ), with respect to this parameter, multiplied by a learning rate, η, as follows:

$$\Delta\theta = -\eta \, \nabla_{\theta} J(\theta)$$
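The update rule Δθ = −η∇θJ(θ) can be illustrated with a minimal sketch. It assumes a single scalar parameter and a hypothetical toy loss J(θ) = (θ − 3)², whose gradient is 2(θ − 3) and whose minimum lies at θ = 3. The function names `gradient_descent` and `grad_quadratic`, as well as η = 0.1 and 100 steps, are illustrative choices rather than anything taken from the text.

```python
def gradient_descent(grad, theta0, eta=0.1, n_steps=100):
    """Repeatedly apply the basic update theta <- theta - eta * grad(theta)."""
    theta = theta0
    for _ in range(n_steps):
        # Move against the gradient, scaled by the learning rate eta.
        theta = theta - eta * grad(theta)
    return theta


# Hypothetical toy loss J(theta) = (theta - 3)^2; its gradient is 2 * (theta - 3).
def grad_quadratic(theta):
    return 2.0 * (theta - 3.0)


theta_hat = gradient_descent(grad_quadratic, theta0=0.0, eta=0.1, n_steps=100)
print(round(theta_hat, 4))  # prints 3.0
```

For this loss, each step shrinks the distance to the minimum by the factor 1 − 2η = 0.8, which is why the iterates converge. A learning rate that is too large (η > 1 here) would make that factor exceed 1 in magnitude, and the iterates would diverge instead.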

The gradient can be evaluated on all training data (batch gradient descent), on a randomized batch of data (mini-batch), or on individual observations (called online learning). Random sampling gives rise to stochastic gradient descent (SGD), which often converges faster, provided that the random samples yield an unbiased estimate of the gradient direction at every point in the iterative process.
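The three ways of evaluating the gradient differ only in how many observations feed each update. The following sketch is illustrative rather than the book's code: it fits a hypothetical one-parameter linear model y ≈ w·x to synthetic data (true slope 2) by minimizing mean squared error. The names `mse_gradient` and `train`, the learning rate η = 0.05, and the 20 epochs are all assumptions chosen for the demo.

```python
import random

# Hypothetical synthetic data: y = 2x + Gaussian noise, so the "true" weight is 2.
data_rng = random.Random(42)
data = []
for _ in range(200):
    x = data_rng.uniform(-1.0, 1.0)
    data.append((x, 2.0 * x + data_rng.gauss(0.0, 0.1)))


def mse_gradient(w, batch):
    """Gradient of the mean squared error of the model y ~ w * x over (x, y) pairs."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def train(data, batch_size, eta=0.05, n_epochs=20, seed=0):
    """Run gradient descent where every update uses `batch_size` observations.

    batch_size == len(data): full-batch gradient descent (one update per epoch)
    1 < batch_size < len(data): mini-batch SGD
    batch_size == 1: online learning
    """
    rng = random.Random(seed)
    w = 0.0
    for _ in range(n_epochs):
        shuffled = data[:]
        rng.shuffle(shuffled)  # draw this epoch's batches in random order
        for start in range(0, len(shuffled), batch_size):
            batch = shuffled[start:start + batch_size]
            w -= eta * mse_gradient(w, batch)
    return w


for name, size in [("full batch", len(data)), ("mini-batch", 20), ("online", 1)]:
    print(f"{name:>10}: w = {train(data, size):.3f}")
```

All three runs make the same number of passes over the data, so they evaluate the same number of per-observation gradients. However, the full-batch run performs only 20 updates, compared with 200 for mini-batches of 20 and 4,000 for online learning. With these assumed settings, the full-batch estimate therefore ends well short of 2, while the two stochastic variants land close to it. The price of the stochastic variants is noisier individual steps.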

There are numerous challenges ...